58. Fetch phase

1. Fetch phase workflow

- The coordinating node identifies which documents need to be fetched and issues a multi GET request to the relevant shards.

- Each shard loads the documents and enriches them, if required, and then returns the documents to the coordinating node.

- Once all documents have been fetched, the coordinating node returns the results to the client.

The coordinating node first decides which documents actually need to be fetched. For instance, if our query specified { "from": 90, "size": 10 }, the first 90 results would be discarded and only the next 10 results would need to be retrieved. These documents may come from one, some, or all of the shards involved in the original search request.

The coordinating node builds a multi-get request for each shard that holds a pertinent document and sends the request to the same shard copy that handled the query phase.

The shard loads the document bodies—the _source field—and, if requested, enriches the results with metadata and search snippet highlighting. Once the coordinating node receives all results, it assembles them into a single response that it returns to the client.

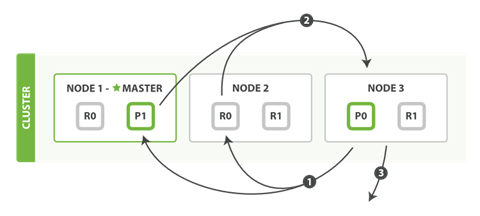

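(1) In the query phase, each shard returns only the IDs and sort values of its documents. The coordinating node builds a priority queue from these IDs and sort values, then sends an mget request with the IDs of the documents it actually needs to the shards that hold them.

(2) Each shard returns the requested documents to the coordinating node.

(3) The coordinating node merges the documents and returns the combined result to the client.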
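The selection step performed by the coordinating node can be sketched in a few lines of Python. This is only an illustration of the idea, not how Elasticsearch implements it: the function name `select_docs_to_fetch`, the shape of `shard_results`, and the tie-breaking rule are all assumptions made for the example.

```python
from collections import defaultdict


def select_docs_to_fetch(shard_results, from_, size):
    """Pick the page of hits to fetch and group their IDs by shard.

    shard_results is a hypothetical structure:
    {shard_id: [(score, doc_id), ...]} as returned by the query phase.
    """
    # Flatten the per-shard (score, doc_id) pairs into one list.
    all_hits = [
        (score, shard_id, doc_id)
        for shard_id, hits in shard_results.items()
        for score, doc_id in hits
    ]
    # Global ranking: highest score first. Ties are broken by shard and
    # doc ID here, which is an assumption made for this sketch.
    ranked = sorted(all_hits, key=lambda h: (-h[0], h[1], h[2]))

    # Discard the first `from_` hits and keep the next `size`.
    page = ranked[from_:from_ + size]

    # One multi-get request per shard that holds a document on this page.
    per_shard = defaultdict(list)
    for _score, shard_id, doc_id in page:
        per_shard[shard_id].append(doc_id)
    return dict(per_shard)


shard_results = {
    0: [(9.0, "a"), (5.0, "b")],
    1: [(8.0, "c"), (7.0, "d")],
    2: [(1.0, "e")],
}
requests = select_docs_to_fetch(shard_results, from_=1, size=3)
print(requests)
```

With `from_=1` and `size=3`, the top hit is skipped and the page consists of documents "c", "d", and "b", so shard 2 receives no fetch request at all.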

2. A regular search that does not specify from and size returns the top 10 results by default, sorted by _score.

Further reading
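As a small illustration of these defaults, the helper below resolves the paging that applies to a search request body. The function name `effective_paging` is hypothetical; only the default values (from = 0, size = 10) come from Elasticsearch itself.

```python
def effective_paging(body):
    """Return the (from, size) pair that applies to a search request body.

    A missing "from" is treated as 0 and a missing "size" as 10,
    mirroring the Elasticsearch defaults.
    """
    return body.get("from", 0), body.get("size", 10)


# No paging parameters: the first 10 hits are returned.
print(effective_paging({"query": {"match_all": {}}}))

# Explicit paging: skip 90 hits, return the next 10.
print(effective_paging({"query": {"match_all": {}}, "from": 90, "size": 10}))
```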

The query-then-fetch process supports pagination with the from and size parameters, but within limits. Remember that each shard must build a priority queue of length from + size, all of which need to be passed back to the coordinating node. And the coordinating node needs to sort through number_of_shards * (from + size) documents in order to find the correct size documents.
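To get a feel for these numbers, the arithmetic can be written out as a short Python sketch. The function name `deep_paging_cost` is made up for this example, and the figures are upper bounds: a shard holding fewer than from + size matching documents returns fewer entries.

```python
def deep_paging_cost(number_of_shards, from_, size):
    """Estimate the query-phase work for one page of results.

    Returns a tuple of:
      - the priority queue length each shard must build,
      - the number of entries the coordinating node must sort through,
      - how many of those entries are thrown away.
    """
    per_shard_queue = from_ + size
    coordinator_entries = number_of_shards * per_shard_queue
    discarded = coordinator_entries - size
    return per_shard_queue, coordinator_entries, discarded


# First page on a 5-shard index: 10 entries per shard, 50 in total.
print(deep_paging_cost(5, 0, 10))

# Page 1,001 on the same index: 10,010 per shard, 50,050 in total,
# of which all but 10 are discarded.
print(deep_paging_cost(5, 10_000, 10))
```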

Depending on the size of your documents, the number of shards, and the hardware you are using, paging 10,000 to 50,000 results (1,000 to 5,000 pages) deep should be perfectly doable. But with big-enough from values, the sorting process can become very heavy indeed, using vast amounts of CPU, memory, and bandwidth. For this reason, we strongly advise against deep paging.

In practice, "deep pagers" are seldom human anyway. A human will stop paging after two or three pages and will change the search criteria. The culprits are usually bots or web spiders that tirelessly keep fetching page after page until your servers crumble at the knees.

If you do need to fetch large numbers of docs from your cluster, you can do so efficiently by disabling sorting with the scroll query, which we discuss later in this chapter.
