HiBench成长笔记——(3) HiBench测试Spark

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html

创建并修改配置文件conf/spark.conf

cp conf/spark.conf.template conf/spark.conf

参考:https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md,设置属性为下列值

# Spark home hibench.spark.home /opt/cloudera/parcels/CDH--.cdh5./lib/spark # Spark master # standalone mode: spark://xxx:7077 # YARN mode: yarn-client hibench.spark.master yarn-client

执行脚本

bin/workloads/micro/wordcount/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Input Deleted hdfs://node1:8020/HiBench/Wordcount/Input Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /opt/cloudera/parcels/CDH--.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-examples--cdh5. -D mapreduce.randomtextwriter.bytespermap= -D mapreduce.job.maps= -D mapreduce.job.reduces= hdfs://node1:8020/HiBench/Wordcount/Input The job took seconds. finish HadoopPrepareWordcount bench

执行脚本

bin/workloads/micro/wordcount/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Output Deleted hdfs://node1:8020/HiBench/Wordcount/Output hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Wordcount/Input Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/wordcount/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar hdfs://node1:8020/HiBench/Wordcount/Input hdfs://node1:8020/HiBench/Wordcount/Output // :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon. finish ScalaSparkWordcount bench

查看report/hibench.report

Type Date Time Input_data_size Duration(s) Throughput(bytes/s) Throughput/node HadoopWordcount -- :: ScalaSparkWordcount -- ::

\report\wordcount\spark下有多个文件:monitor.log是原始日志,bench.log是scheduler.DAGScheduler和scheduler.TaskSetManager信息,monitor.html可视化了系统的性能信息,\conf\wordcount.conf、\conf\sparkbench\spark.conf和\conf\sparkbench\sparkbench.conf是本次任务的环境变量

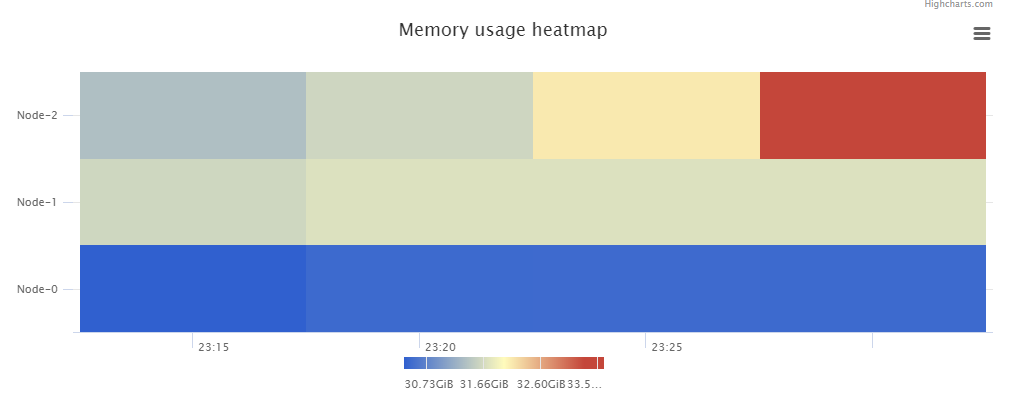

monitor.html中包含了Memory usage heatmap等统计图:

根据官方文档 https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md ,还可以修改 hibench.scale.profile 调整测试的数据规模,修改 hibench.default.map.parallelism 和 hibench.default.shuffle.parallelism 调整并行化,修改hibench.yarn.executor.num、hibench.yarn.executor.cores、spark.executor.memory和spark.driver.memory控制Spark executor 的数量、核数、内存和driver的内存。

最新文章

- HTML5之文件API

- 使用AssetsLibrary.Framework创建多图片选择控制器(翻译)

- Python科学计算——前期准备

- myBatis 实现用户表增操作(复杂型)

- 关于deferred

- 【Todo】用python进行机器学习数据模拟及逻辑回归实验

- Android查询:模拟键盘鼠标事件(adb shell 实现)

- hibernate面试笔记

- [cocos2d] 利用texture atlases生成动画

- html网页代码各种名称及作用

- java学习笔记(12) —— Struts2 通过 xml /json 实现简单的业务处理

- compass模块----Utilities----Sprites精灵图合图

- localStorge它storage事件

- C - 哗啦啦村的扩建

- [转] spring事务管理几种方式

- python_ 函数

- requests库入门08-delete请求

- 使用docker查看jvm状态,在docker中使用jmap,jstat

- leetcode — reverse-nodes-in-k-group

- 使用intellij idea搭建spring-springmvc-mybatis整合框架环境