PyTorch 1.0 Hands-On, Part 3: CIFAR-10 Classification with ResNet, Visualizing Training with visdom

2024-09-05 12:26:40

"A person's aspirations are usually proportional to his abilities." - Johnson

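I have been using the PyTorch deep learning framework a lot lately and want to build something real with it, but first I need to understand some of its basic principles. In this post I use PyTorch to implement a ResNet that classifies the CIFAR-10 dataset. CIFAR-10 contains 60,000 color images of 32×32 pixels, each with three RGB channels, split into 50,000 training and 10,000 test images. There are 10 classes with 6,000 images each, including airplane, bird, cat, dog and so on.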
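The CIFAR10 dataset returns integer targets 0-9 rather than class names. As a small sketch, assuming the standard CIFAR-10 class order (the same list recent torchvision releases expose as `train_dataset.classes`), the labels can be mapped back to names; the helper `label_to_name` is hypothetical and not part of the original code.

```python
# Standard CIFAR-10 class order (label index -> name)
CIFAR10_CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
                   'dog', 'frog', 'horse', 'ship', 'truck']

TRAIN_SIZE, TEST_SIZE = 50000, 10000
IMAGES_PER_CLASS = 6000


def label_to_name(label):
    """Map an integer CIFAR-10 target (0-9) to its class name."""
    return CIFAR10_CLASSES[label]


# 10 classes x 6000 images = 60000 = 50000 train + 10000 test
assert len(CIFAR10_CLASSES) * IMAGES_PER_CLASS == TRAIN_SIZE + TEST_SIZE
print(label_to_name(3))  # cat
```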

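Note: if you directly use ResNet18, ResNet34 or the other models from torchvision.models, the final feature maps become too small. Those networks were designed for ImageNet and downsample the input by a factor of 32, so a 32×32 CIFAR-10 image shrinks to 1×1 before the final pooling. The ResNet architecture therefore has to be redesigned for CIFAR-10. With datasets such as ImageNet, whose images are 224×224, this problem does not arise.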
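To make the size argument concrete, one can trace the spatial size through every stride-2 stage with the usual output-size formula floor((n + 2p - k) / s) + 1. The sketch below assumes the standard torchvision ImageNet layout (7×7 stride-2 stem convolution, 3×3 stride-2 max-pool, and a stride-2 3×3 convolution at the start of layer2 to layer4) and the three-stage CIFAR design used in this post; the helper names are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def imagenet_resnet_final_size(size):
    size = conv_out(size, 7, 2, 3)  # stem conv: 7x7, stride 2, padding 3
    size = conv_out(size, 3, 2, 1)  # max-pool: 3x3, stride 2, padding 1
    for _ in range(3):              # layer2..layer4 each halve the size
        size = conv_out(size, 3, 2, 1)
    return size


def cifar_resnet_final_size(size):
    size = conv_out(size, 3, 1, 1)  # stem conv: 3x3, stride 1, keeps the size
    for _ in range(2):              # layer2 and layer3 each halve the size
        size = conv_out(size, 3, 2, 1)
    return size


print(imagenet_resnet_final_size(224))  # 7
print(imagenet_resnet_final_size(32))   # 1
print(cifar_resnet_final_size(32))      # 8
```

The ImageNet-style network is left with a single spatial position for a 32×32 input, while the three-stage design keeps an 8×8 map, which is why the network in this post ends with `nn.AvgPool2d(8)`.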

1. Environment:

- Python 3.6.8
- Windows 10
- GTX 1060
- CUDA 9.0 + cuDNN 7.4 + VS2017
- torch 1.0.1
- visdom 0.1.8.8

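2. The CIFAR-10 experiment consists of these steps:

- Load and preprocess the CIFAR-10 dataset with torchvision
- Define the network
- Define the loss function and the optimizer
- Train the network: compute the loss, clear the gradients, backpropagate, and update the network parameters
- Test the network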
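The training step in the list above can be tried on synthetic data before touching CIFAR-10. This is a minimal sketch, not the post's code: a hypothetical linear classifier stands in for the ResNet and random tensors stand in for one batch of 3×32×32 images. The part that carries over is the order of operations: forward pass, loss, `zero_grad()`, `backward()`, `step()`.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def train_step(model, criterion, optimizer, inputs, labels):
    """One iteration: forward, loss, clear gradients, backpropagate, update."""
    outputs = model(inputs.view(inputs.size(0), -1))  # flatten to (batch, 3*32*32)
    loss = criterion(outputs, labels)
    optimizer.zero_grad()  # gradients accumulate, so clear the previous ones
    loss.backward()        # compute gradients of the loss w.r.t. the parameters
    optimizer.step()       # apply the SGD-with-momentum update
    return loss.item()


torch.manual_seed(0)
model = nn.Linear(3 * 32 * 32, 10)  # hypothetical stand-in for the ResNet
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

inputs = torch.randn(8, 3, 32, 32)    # fake batch of 8 images
labels = torch.randint(0, 10, (8,))   # fake class labels 0-9
losses = [train_step(model, criterion, optimizer, inputs, labels) for _ in range(20)]
print(losses[0], losses[-1])  # the loss on this fixed batch should go down
```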

3. Code

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
import visdom

# visdom needs a running server: start it first with `python -m visdom.server`
vis = visdom.Visdom()

batch_size = 100
lr = 0.001
momentum = 0.9
epochs = 100
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


def conv3x3(in_channels, out_channels, stride=1):
    # 3x3 convolution with padding 1: keeps H and W unchanged when stride = 1
    return nn.Conv2d(in_channels, out_channels, kernel_size=3,
                     stride=stride, padding=1, bias=False)


class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1, shotcut=None):
        super(ResidualBlock, self).__init__()
        self.conv1 = conv3x3(in_channels, out_channels, stride)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(out_channels, out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # "shotcut" = shortcut branch (name kept so it matches the printed structure)
        self.shotcut = shotcut

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        if self.shotcut is not None:
            # Project x so its shape matches out (channels and/or spatial size changed)
            residual = self.shotcut(x)
        out += residual
        out = self.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, block, layer, num_classes=10):
        super(ResNet, self).__init__()
        self.in_channels = 16
        self.conv = conv3x3(3, 16)
        self.bn = nn.BatchNorm2d(16)
        self.relu = nn.ReLU(inplace=True)
        self.layer1 = self.make_layer(block, 16, layer[0])     # 16 x 32 x 32
        self.layer2 = self.make_layer(block, 32, layer[1], 2)  # 32 x 16 x 16
        self.layer3 = self.make_layer(block, 64, layer[2], 2)  # 64 x 8 x 8
        self.avg_pool = nn.AvgPool2d(8)                        # 64 x 1 x 1
        self.fc = nn.Linear(64, num_classes)

    def make_layer(self, block, out_channels, blocks, stride=1):
        shotcut = None
        if (stride != 1) or (self.in_channels != out_channels):
            shotcut = nn.Sequential(
                nn.Conv2d(self.in_channels, out_channels, kernel_size=3,
                          stride=stride, padding=1),
                nn.BatchNorm2d(out_channels))
        layers = []
        # Only the first block of a stage changes the shape and uses the shortcut projection
        layers.append(block(self.in_channels, out_channels, stride, shotcut))
        self.in_channels = out_channels
        for i in range(1, blocks):
            layers.append(block(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.avg_pool(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 64)
        x = self.fc(x)
        return x


# Normalize the dataset (these are the ImageNet channel statistics)
data_tf = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# download=True fetches CIFAR-10 on the first run and skips it once the files are verified
train_dataset = datasets.CIFAR10(root='./datacifar/', train=True,
                                 transform=data_tf, download=True)
test_dataset = datasets.CIFAR10(root='./datacifar/', train=False,
                                transform=data_tf, download=True)

# Prints 50000 3 32 32 and 10000 3 32 32 (count, channels, height, width)
print("Training set size:", len(train_dataset), *train_dataset[0][0].shape)
print("Test set size:", len(test_dataset), *test_dataset[0][0].shape)

# Build the data iterators
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

# print(train_loader.dataset) shows something like the following. This output was
# printed with mean = std = 0.5; with data_tf above, the Normalize line shows the
# ImageNet values instead.
#
# Dataset CIFAR10
#     Number of datapoints: 50000
#     Split: train
#     Root Location: ./datacifar/
#     Transforms (if any): Compose(
#         ToTensor()
#         Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
#     )
#     Target Transforms (if any): None

model = ResNet(ResidualBlock, [3, 3, 3], 10).to(device)
criterion = nn.CrossEntropyLoss()  # loss function
optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)
print(model)

if __name__ == '__main__':
    global_step = 0
    for epoch in range(epochs):
        # Back to training mode every epoch, since model.eval() is called below
        model.train()
        for i, (inputs, label) in enumerate(train_loader):
            inputs = inputs.to(device)
            label = label.to(device)
            output = model(inputs)
            loss = criterion(output, label)
            optimizer.zero_grad()  # clear old gradients
            loss.backward()        # backpropagate
            optimizer.step()       # update the parameters

            if i % 100 == 99:
                print('epoch:%d | batch: %d | loss:%.03f' % (epoch + 1, i + 1, loss.item()))
                vis.line(X=np.array([global_step]), Y=np.array([loss.item()]), win='loss',
                         opts=dict(title='train loss'), update='append')
            global_step = global_step + 1

        # Evaluate on the test set
        model.eval()  # evaluation mode: BatchNorm uses its running statistics
        correct = 0
        total = 0
        with torch.no_grad():  # no gradients needed for testing
            for images, labels in test_loader:
                images, labels = images.to(device), labels.to(device)
                output_test = model(images)
                # predicted holds the index of the largest logit, i.e. the predicted class
                _, predicted = torch.max(output_test, 1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()
        print("epoch: %d test acc: %.4f" % (epoch + 1, correct / total))
```
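The code normalizes with the ImageNet channel statistics ([0.485, 0.456, 0.406] and [0.229, 0.224, 0.225]). A common alternative, not used in the original post, is to compute the mean and standard deviation of CIFAR-10's own training images. A minimal sketch, with a hypothetical helper `channel_mean_std` that expects a tensor of shape (N, C, H, W) already scaled to [0, 1] by `transforms.ToTensor()`:

```python
import torch


def channel_mean_std(images):
    """Per-channel mean and std of an (N, C, H, W) tensor."""
    # Move channels to the front and flatten the rest: (C, N*H*W)
    flat = images.transpose(0, 1).contiguous().view(images.size(1), -1)
    return flat.mean(dim=1), flat.std(dim=1)


# Synthetic check: channel 0 is all 0, channel 1 all 1, channel 2 all 0.5
imgs = torch.zeros(4, 3, 2, 2)
imgs[:, 1] = 1.0
imgs[:, 2] = 0.5
mean, std = channel_mean_std(imgs)
print(mean, std)
```

To apply it, one could load the training set with `transform=transforms.ToTensor()` only, stack it with `torch.stack([img for img, _ in dataset])` (about 600 MB of float32 for 50,000 images), and put the returned values into `transforms.Normalize` in place of the ImageNet lists.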

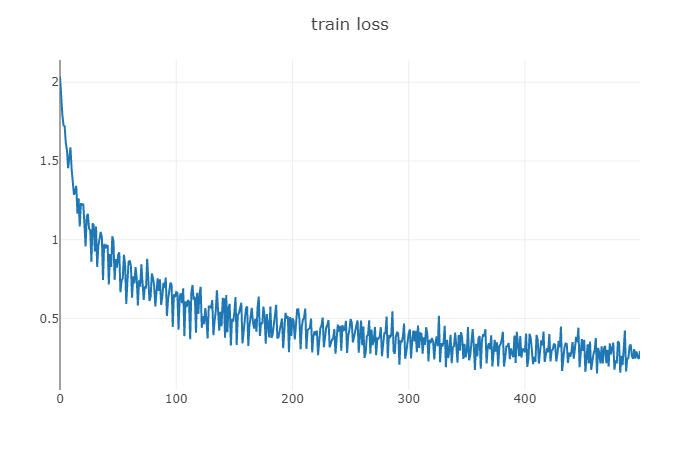

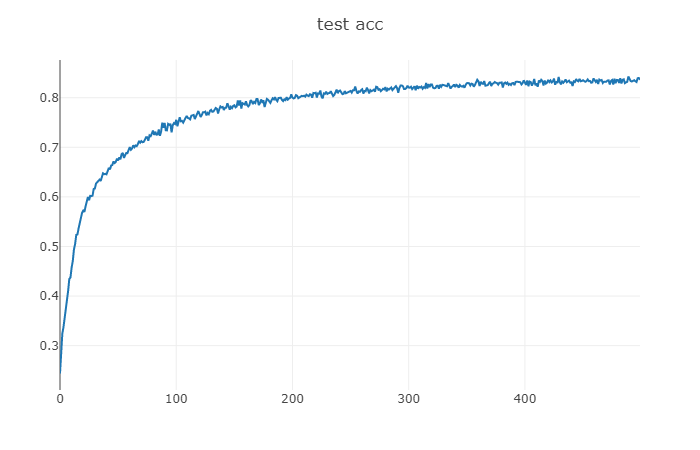

4. Results

Training loss: epoch:100 | batch: 500 | loss:0.294

Test accuracy: epoch: 100 test acc: 0.8363
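The numbers in the log follow from the dataset sizes. With 50,000 training images and batch_size = 100 there are 500 batches per epoch, and because the loss is printed every 100 batches, the last line of each epoch reads batch: 500. The test accuracy is the fraction of test images whose highest-scoring logit matches the label, so 0.8363 corresponds to 8,363 of the 10,000 test images. A plain-Python sketch of both calculations (helper names are hypothetical):

```python
import math


def batches_per_epoch(num_samples, batch_size):
    # DataLoader keeps the last partial batch by default (drop_last=False), hence ceil
    return math.ceil(num_samples / batch_size)


def accuracy(logits, labels):
    """Fraction of rows whose argmax equals the label, like torch.max(output, 1)."""
    correct = sum(1 for row, y in zip(logits, labels)
                  if max(range(len(row)), key=row.__getitem__) == y)
    return correct / len(labels)


print(batches_per_epoch(50000, 100))                             # 500
print(accuracy([[0.1, 2.0, -1.0], [3.0, 0.0, 0.5]], [1, 2]))    # 0.5
```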

5. Network structure

```
ResNet(
  (conv): Conv2d(3, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
  (bn): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace)
  (layer1): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(16, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (shotcut): Sequential(
        (0): Conv2d(16, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResidualBlock(
      (conv1): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (shotcut): Sequential(
        (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResidualBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avg_pool): AvgPool2d(kernel_size=8, stride=8, padding=0)
  (fc): Linear(in_features=64, out_features=10, bias=True)
)
```
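The printed structure is enough to count the trainable parameters by hand. With three blocks per stage and 16/32/64 filters, the layout follows the 6n + 2 design (n = 3, so 20 weight layers) from the original ResNet paper's CIFAR-10 experiments, except that the shortcut projections here are 3×3 convolutions with a bias. The sketch below is a hypothetical helper, not part of the post: it assumes a conv has in × out × k × k weights (plus out biases when bias=True), a BatchNorm2d has 2C trainable parameters (its running statistics are buffers), and a Linear layer has in × out + out. For the model in the code, `sum(p.numel() for p in model.parameters())` should report the same total.

```python
def conv_params(c_in, c_out, k=3, bias=False):
    return c_in * c_out * k * k + (c_out if bias else 0)


def bn_params(c):
    return 2 * c  # weight (gamma) and bias (beta)


def block_params(c_in, c_out, has_shotcut):
    n = conv_params(c_in, c_out) + bn_params(c_out)    # conv1 + bn1
    n += conv_params(c_out, c_out) + bn_params(c_out)  # conv2 + bn2
    if has_shotcut:  # 3x3 projection conv (with bias) + BatchNorm
        n += conv_params(c_in, c_out, bias=True) + bn_params(c_out)
    return n


def resnet_params(layers=(3, 3, 3), widths=(16, 32, 64), strides=(1, 2, 2), num_classes=10):
    total = conv_params(3, widths[0]) + bn_params(widths[0])  # stem conv + bn
    c_in = widths[0]
    for n_blocks, c_out, stride in zip(layers, widths, strides):
        for i in range(n_blocks):
            # Mirrors make_layer: only the first block may need a shortcut projection
            has_shotcut = i == 0 and (stride != 1 or c_in != c_out)
            total += block_params(c_in, c_out, has_shotcut)
            c_in = c_out
    total += widths[-1] * num_classes + num_classes  # fc layer
    return total


print(resnet_params())  # 293050
```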
