Exercise: Self-Taught Learning

First, you will train your sparse autoencoder on an "unlabeled" training dataset of handwritten digits. This produces features that are penstroke-like. We then extract these learned features from a labeled dataset of handwritten digits. These features will then be used as inputs to the softmax classifier that you wrote in the previous exercise.

Concretely, for each example in the labeled training dataset $x_l$, we forward propagate the example to obtain the activation of the hidden units $a^{(2)}$. We now represent this example using $a^{(2)}$ (the "replacement" representation), and use this as the new feature representation with which to train the softmax classifier.
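In the notation of the UFLDL lecture notes (an assumption, since this post does not define its symbols), the hidden activation computed for a labeled example $x_l$ can be written as below, where $W^{(1)}$ and $b^{(1)}$ are the first-layer weights and biases of the trained autoencoder and $f$ is the sigmoid:

$$
a^{(2)} = f\left(W^{(1)} x_l + b^{(1)}\right), \qquad f(z) = \frac{1}{1 + e^{-z}}
$$

Only the encoder half of the autoencoder is used here; the decoder weights $W^{(2)}$ and $b^{(2)}$ play no part in feature extraction.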

Finally, we also extract the same features from the test data to obtain predictions.

In this exercise, our goal is to distinguish between the digits from 0 to 4. We will use the digits 5 to 9 as our "unlabeled" dataset with which to learn the features; we will then use a labeled dataset with the digits 0 to 4 with which to train the softmax classifier.
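The split described above can be sketched on a toy label vector. This is only an illustration: `toyLabels` and the `*Idx` names are hypothetical stand-ins for the real MNIST labels, and the half/half train/test split is an assumption.

```matlab
% Toy stand-in for the MNIST label vector (hypothetical values).
toyLabels = [0 7 3 5 4 9 1 6 2 8]';

labeledIdx   = find(toyLabels >= 0 & toyLabels <= 4);  % digits 0-4: supervised task
unlabeledIdx = find(toyLabels >= 5);                   % digits 5-9: feature learning only

% Split the labeled examples: first half for training, the rest for testing.
numTrain = round(numel(labeledIdx) / 2);
trainIdx = labeledIdx(1:numTrain);
testIdx  = labeledIdx(numTrain+1:end);

% Shift labels from 0-4 to 1-5, because class indices start at 1 in MATLAB.
trainLabels = toyLabels(trainIdx)' + 1;
testLabels  = toyLabels(testIdx)' + 1;
```

Note that the digits 5 to 9 never receive a label in this setup; they are used purely as raw images for the autoencoder.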

Step 1: Generate the input and test data sets

Step 2: Train the sparse autoencoder

Use the unlabeled data (the digits from 5 to 9) to train a sparse autoencoder.
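For reference, the objective minimized in this step can be written as follows. This assumes the standard sparse autoencoder formulation from the lecture notes, with `lambda`, `beta` and `sparsityParam` in the code playing the roles of $\lambda$, $\beta$ and $\rho$; $m$ is the number of unlabeled examples and $\hat\rho_j$ is the average activation of hidden unit $j$ over those examples:

$$
J_{\text{sparse}}(W,b) = \frac{1}{m}\sum_{i=1}^{m} \frac{1}{2}\left\| h_{W,b}\left(x^{(i)}\right) - x^{(i)} \right\|^2 + \frac{\lambda}{2}\sum_{l}\sum_{i}\sum_{j}\left(W^{(l)}_{ji}\right)^2 + \beta \sum_{j} \mathrm{KL}\left(\rho \,\|\, \hat\rho_j\right)
$$

$$
\mathrm{KL}\left(\rho \,\|\, \hat\rho_j\right) = \rho \log\frac{\rho}{\hat\rho_j} + (1-\rho)\log\frac{1-\rho}{1-\hat\rho_j}
$$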

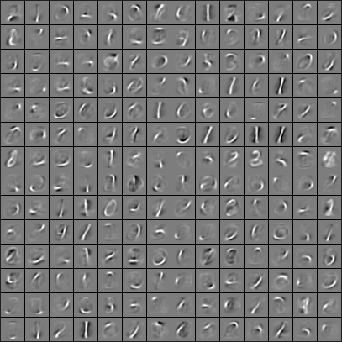

When training is complete, you should get a visualization of pen strokes like the image shown below:

Informally, the features learned by the sparse autoencoder should correspond to penstrokes.

Step 3: Extracting features

After the sparse autoencoder is trained, you will use it to extract features from the handwritten digit images.
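A minimal sketch of the feature extraction on toy data follows. The sizes and parameter values are made up for illustration, and `bsxfun` is used to add the bias to every column; this is one possible way to write it, not necessarily how your own implementation has to look.

```matlab
% Toy sizes (assumptions for illustration; the exercise uses 28*28 inputs).
visibleSize = 4;
hiddenSize  = 3;
numExamples = 5;

% Stand-ins for the trained encoder parameters and the data matrix.
W1   = 0.1 * ones(hiddenSize, visibleSize);
b1   = zeros(hiddenSize, 1);
data = ones(visibleSize, numExamples);   % one example per column

% Hidden-layer activation sigmoid(W1 * x + b1), computed for all columns at once.
z2 = bsxfun(@plus, W1 * data, b1);
features = 1 ./ (1 + exp(-z2));
```

The result has one column per example and one row per hidden unit, so `features` can be passed to the softmax classifier in place of the raw pixels.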

Step 4: Training the softmax classifier

Use your code from the softmax exercise (softmaxTrain.m) to train a softmax classifier using the training set features (trainFeatures) and labels (trainLabels).

Step 5: Classifying on the test set

Finally, complete the code to make predictions on the test set (testFeatures) and see how your learned features perform! If you've done all the steps correctly, you should get an accuracy of about 98%.
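As a rough illustration of the prediction and scoring step, the sketch below classifies four toy examples with a hand-written parameter matrix. `thetaToy`, `testFeaturesToy` and `testLabelsToy` are hypothetical; in the exercise the parameters come from your trained softmax model.

```matlab
% Hypothetical softmax parameters: numClasses x numFeatures (toy values).
thetaToy = [ 2  0;
             0  2;
            -1 -1];
testFeaturesToy = [1 0 0.2 0.9;    % one test example per column
                   0 1 0.8 0.1];
testLabelsToy   = [1 2 2 2];       % true classes (1-based)

% The predicted class is the row with the largest score. The softmax
% normalisation does not change which row is largest, so it is skipped here.
[~, predToy] = max(thetaToy * testFeaturesToy, [], 1);

% Accuracy is the percentage of predictions that match the true labels.
accuracy = 100 * mean(predToy(:) == testLabelsToy(:));
fprintf('Test Accuracy: %.1f%%\n', accuracy);
```

Comparing `predToy(:)` with `testLabelsToy(:)` forces both into column vectors, which avoids an accidental row-versus-column mismatch.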

Code

%% CS294A/CS294W Self-taught Learning Exercise

%  Instructions
%  ------------
%
%  This file contains code that helps you get started on the
%  self-taught learning. You will need to complete code in feedForwardAutoencoder.m
%  You will also need to have implemented sparseAutoencoderCost.m and
%  softmaxCost.m from previous exercises.
%

%% ======================================================================
%  STEP 0: Here we provide the relevant parameter values that will
%  allow your sparse autoencoder to get good filters; you do not need to
%  change the parameters below.

inputSize  = 28 * 28;
numLabels  = 5;
hiddenSize = 200;
sparsityParam = 0.1; % desired average activation of the hidden units.
                     % (This was denoted by the Greek alphabet rho, which looks like a lower-case "p",
                     %  in the lecture notes).
lambda = 3e-3;       % weight decay parameter
beta = 3;            % weight of sparsity penalty term
maxIter = 400;

%% ======================================================================

%  STEP 1: Load data from the MNIST database
%
%  This loads our training and test data from the MNIST database files.
%  We have sorted the data for you in this so that you will not have to
%  change it.

% Load MNIST database files
mnistData   = loadMNISTImages('train-images.idx3-ubyte');
mnistLabels = loadMNISTLabels('train-labels.idx1-ubyte');

% Set Unlabeled Set (All Images)

% Simulate a Labeled and Unlabeled set
labeledSet   = find(mnistLabels >= 0 & mnistLabels <= 4);
unlabeledSet = find(mnistLabels >= 5);

% Added line: keep only the leading part of the unlabeled set.
% ASSUMPTION: the divisor is missing from the source; 2 is used here.
unlabeledSet = unlabeledSet(1:end/2);

% ASSUMPTION: the divisors on the next two lines are missing from the source;
% 2 matches the "half" mentioned in the comment.
numTest  = round(numel(labeledSet)/2); % use half of the samples for training
numTrain = round(numel(labeledSet)/2);
trainSet = labeledSet(1:numTrain);
testSet  = labeledSet(numTrain+1:2*numTrain);

unlabeledData = mnistData(:, unlabeledSet); % Why do these two statements raise an error when run back to back?
% pack;
trainData   = mnistData(:, trainSet);
trainLabels = mnistLabels(trainSet)' + 1; % Shift Labels to the Range 1-5

% mnistData2 = mnistData;
testData   = mnistData(:, testSet);
testLabels = mnistLabels(testSet)' + 1;   % Shift Labels to the Range 1-5

% Output Some Statistics
fprintf('# examples in unlabeled set: %d\n', size(unlabeledData, 2));
fprintf('# examples in supervised training set: %d\n\n', size(trainData, 2));
fprintf('# examples in supervised testing set: %d\n\n', size(testData, 2));

%% ======================================================================

%  STEP 2: Train the sparse autoencoder
%  This trains the sparse autoencoder on the unlabeled training
%  images.

%  Randomly initialize the parameters
theta = initializeParameters(hiddenSize, inputSize);

%% ----------------- YOUR CODE HERE ----------------------
%  Find opttheta by running the sparse autoencoder on
%  unlabeledTrainingImages

opttheta = theta;

addpath minFunc/
options.Method = 'lbfgs';
options.maxIter = maxIter; % ASSUMPTION: the literal is missing from the source; maxIter from STEP 0 is used
options.display = 'on';
[opttheta, loss] = minFunc( @(p) sparseAutoencoderLoss(p, ...
                                 inputSize, hiddenSize, ...
                                 lambda, sparsityParam, ...
                                 beta, unlabeledData), ...
                            theta, options);

%% -----------------------------------------------------

% Visualize weights
W1 = reshape(opttheta(1:hiddenSize * inputSize), hiddenSize, inputSize);
display_network(W1');

%%======================================================================

%% STEP 3: Extract Features from the Supervised Dataset
%
%  You need to complete the code in feedForwardAutoencoder.m so that the
%  following command will extract features from the data.

trainFeatures = feedForwardAutoencoder(opttheta, hiddenSize, inputSize, ...
                                       trainData);

testFeatures = feedForwardAutoencoder(opttheta, hiddenSize, inputSize, ...
                                      testData);

%%======================================================================

%% STEP 4: Train the softmax classifier

softmaxModel = struct;
%% ----------------- YOUR CODE HERE ----------------------
%  Use softmaxTrain.m from the previous exercise to train a multi-class
%  classifier.

%  Use lambda = 1e-4 for the weight regularization for softmax
lambda = 1e-4;
inputSize = hiddenSize;
numClasses = numel(unique(trainLabels)); % unique returns the distinct elements of a vector, sorted

%  You need to compute softmaxModel using softmaxTrain on trainFeatures and
%  trainLabels

options.maxIter = 100; % ASSUMPTION: the literal is missing from the source; 100 is used here
softmaxModel = softmaxTrain(inputSize, numClasses, lambda, ...
                            trainFeatures, trainLabels, options);

%% -----------------------------------------------------

%%======================================================================

%% STEP 5: Testing

%% ----------------- YOUR CODE HERE ----------------------
%  Compute Predictions on the test set (testFeatures) using softmaxPredict
%  and softmaxModel

[pred] = softmaxPredict(softmaxModel, testFeatures);

%% -----------------------------------------------------

% Classification Score
fprintf('Test Accuracy: %f%%\n', 100*mean(pred(:) == testLabels(:)));

% (note that we shift the labels by 1, so that digit 0 now corresponds to
%  label 1)
%
% Accuracy is the proportion of correctly classified images
% The results for our implementation were:
%
% Accuracy: 98.3%
%

%% feedForwardAutoencoder.m
function [activation] = feedForwardAutoencoder(theta, hiddenSize, visibleSize, data)

% theta: trained weights from the autoencoder
% visibleSize: the number of input units (inputSize in the main script)
% hiddenSize: the number of hidden units (hiddenSize in the main script)
% data: Our matrix containing the training data as columns. So, data(:,i) is the i-th training example.

% We first convert theta to the (W1, W2, b1, b2) matrix/vector format, so that this
% follows the notation convention of the lecture notes.

W1 = reshape(theta(1:hiddenSize*visibleSize), hiddenSize, visibleSize);
b1 = theta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);

%% ---------- YOUR CODE HERE --------------------------------------
%  Instructions: Compute the activation of the hidden layer for the Sparse Autoencoder.
activation = sigmoid(W1*data + repmat(b1, [1, size(data, 2)]));

%-------------------------------------------------------------------

end

%-------------------------------------------------------------------
% Here's an implementation of the sigmoid function, which you may find useful
% in your computation of the costs and the gradients. This inputs a (row or
% column) vector (say (z1, z2, z3)) and returns (f(z1), f(z2), f(z3)).

function sigm = sigmoid(x)
    sigm = 1 ./ (1 + exp(-x));
end
