Scraper: Lagou (selenium)

2024-10-19 06:21:12

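Use selenium to page through the search results and collect the job links, then parse each link.

Some of the scraped results come back as empty lists. I suspected a slow connection, but adding time.sleep() did not get rid of them.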
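A fixed time.sleep() only helps when the delay happens to be long enough. As a minimal sketch, and assuming the empty result is transient (which has not been confirmed here), the fetch-and-parse step can be retried until it returns something non-empty. The names `is_empty_record` and `fetch_with_retry` are hypothetical, and the sketch assumes the parsing function returns its dictionary instead of only printing it.

```python
import time


def is_empty_record(record):
    """Return True when every field of the scraped record is empty."""
    return all(not value for value in record.values())


def fetch_with_retry(fetch, url, attempts=3, delay=2.0, sleep=time.sleep):
    """Call fetch(url) until it yields a non-empty record or attempts run out.

    `fetch` is any callable that takes a URL and returns a dict of fields.
    The wait grows with each failed attempt (delay, 2*delay, ...).
    """
    record = {}
    for attempt in range(1, attempts + 1):
        record = fetch(url)
        if not is_empty_record(record):
            return record
        if attempt < attempts:
            sleep(delay * attempt)
    # Still empty after the last attempt: hand it back so the caller can log it
    return record
```

If every attempt still comes back empty, the delay is probably not the cause, and it is worth saving the raw response text to see what the server actually returned.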

    import re
    import time

    import requests
    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait


    class LagouSpider(object):
        def __init__(self):
            opt = webdriver.ChromeOptions()
            # Run Chrome in headless mode (set_headless() is gone in Selenium 4)
            opt.add_argument('--headless')
            self.driver = webdriver.Chrome(options=opt)
            self.url = 'https://www.lagou.com/jobs/list_爬虫?px=default&city=北京'
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.75 Safari/537.36'
            }

        def run(self):
            self.driver.get(self.url)
            while True:
                # Collect the job links on the current page and parse each one
                links = self.get_one_page_links()
                for link in links:
                    print('\n' + link + '\n')
                    self.parse_detail_page(link)

                # Wait for the "next page" button before reading its state
                WebDriverWait(self.driver, 10).until(
                    EC.presence_of_element_located((By.CLASS_NAME, 'pager_next')))
                # find_element_by_* was removed in Selenium 4.3; use find_element(By...)
                next_page_btn = self.driver.find_element(By.CLASS_NAME, 'pager_next')
                if 'pager_next_disabled' in next_page_btn.get_attribute('class'):
                    break
                else:
                    next_page_btn.click()
                    time.sleep(1)
            self.driver.quit()

        def get_one_page_links(self):
            # Read the href of every job link from the live page
            hrefs = self.driver.find_elements(By.XPATH, '//a[@class="position_link"]')
            return [href.get_attribute('href') for href in hrefs]

        def parse_detail_page(self, url):
            job_information = {}
            response = requests.get(url, headers=self.headers)
            time.sleep(2)
            html_element = etree.HTML(response.text)

            job_name = html_element.xpath('//div[@class="job-name"]/@title')
            job_description = html_element.xpath('//dd[@class="job_bt"]//p//text()')
            # Remove non-breaking spaces from the description text
            job_description = [re.sub('\xa0', '', i) for i in job_description]
            job_address = html_element.xpath('//div[@class="work_addr"]/a/text()')
            job_salary = html_element.xpath('//span[@class="salary"]/text()')

            # String cleanup: strip the "查看地图" (view map) link text, drop empty strings
            job_address = [re.sub('查看地图', '', i) for i in job_address]
            job_address = [i for i in job_address if i]

            job_information['job_name'] = job_name
            job_information['job_description'] = job_description
            job_information['job_address'] = job_address
            job_information['job_salary'] = job_salary
            print(job_information)


    def main():
        spider = LagouSpider()
        spider.run()


    if __name__ == '__main__':
        main()
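The string cleanup inside parse_detail_page can also be pulled out into a small pure function, which makes it possible to check the cleanup without hitting the site. This is only a sketch: `clean_text_nodes` is a hypothetical name, the sample address list is made up for illustration, and unlike the original code it also strips surrounding whitespace, on the assumption that whitespace-only text nodes are not wanted.

```python
def clean_text_nodes(nodes, noise=()):
    """Clean a list of text nodes returned by an XPath text() query.

    Removes non-breaking spaces and every substring listed in `noise`,
    strips surrounding whitespace, and drops nodes that end up empty.
    """
    cleaned = []
    for text in nodes:
        text = text.replace('\xa0', '')
        for pattern in noise:
            text = text.replace(pattern, '')
        text = text.strip()
        if text:
            cleaned.append(text)
    return cleaned


# Made-up sample resembling the text nodes of an address block
address = ['北京', ' - ', '海淀区', '\n      ', '查看地图']
print(clean_text_nodes(address, noise=('查看地图',)))
```

The same function covers both fields: call it with no `noise` for the job description and with `noise=('查看地图',)` for the address.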

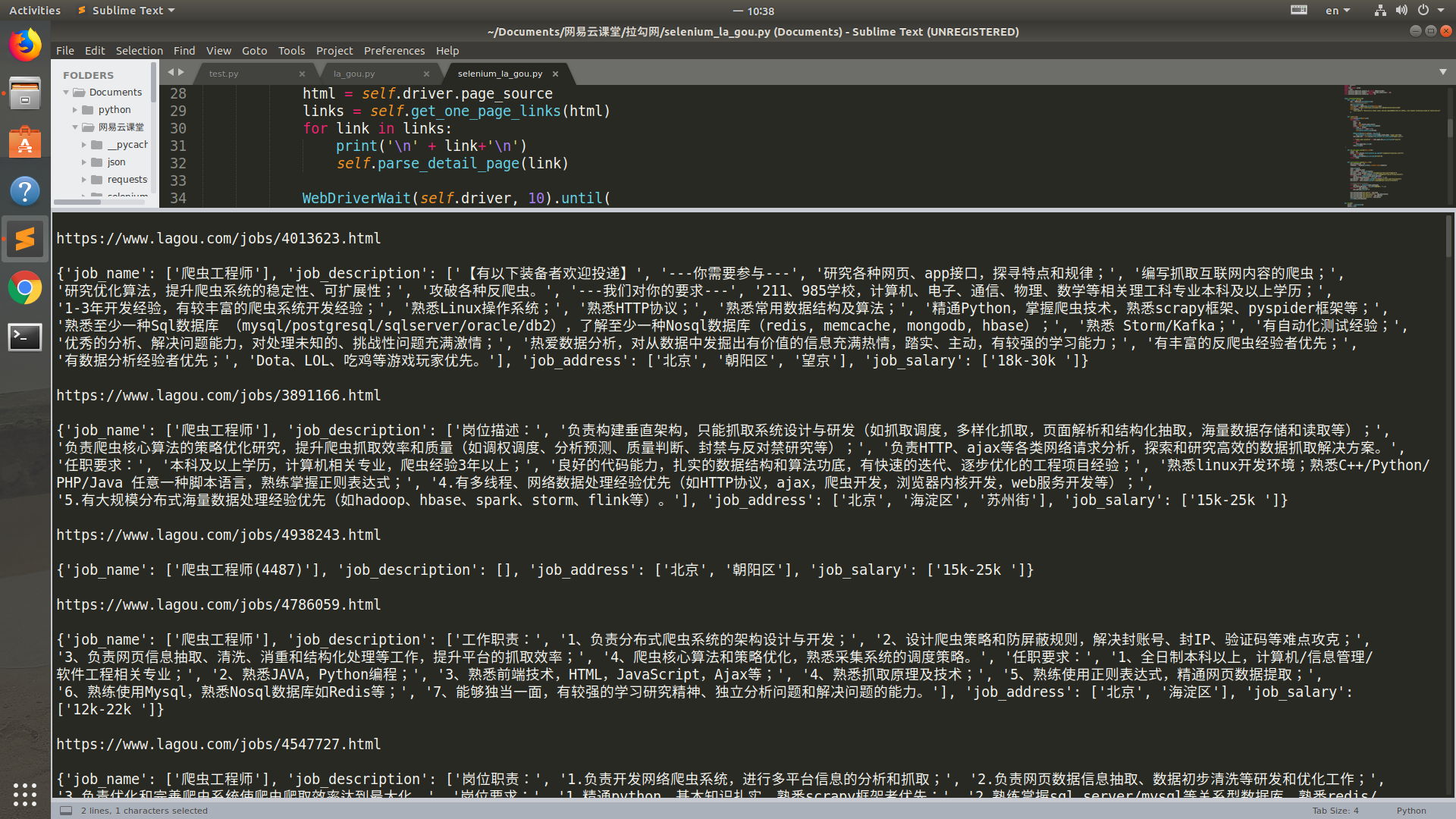

Run output
