Running a Caffe forward pass from C++ on Windows

Reference blog posts:

https://blog.csdn.net/muyouhang/article/details/54773265

https://blog.csdn.net/hhh0209/article/details/79830988

Create a property sheet for Caffe named caffe_gpu_x64_release.props.

Add the header directories from NugetPackages, Caffe, and CUDA.

Properties - C/C++ - Additional Include Directories:

D:\caffe20190311\NugetPackages\OpenCV.2.4.10\build\native\include

D:\caffe20190311\NugetPackages\OpenBLAS.0.2.14.1\lib\native\include

D:\caffe20190311\NugetPackages\protobuf-v120.2.6.1\build\native\include

D:\caffe20190311\NugetPackages\glog.0.3.3.0\build\native\include

D:\caffe20190311\NugetPackages\gflags.2.1.2.1\build\native\include

D:\caffe20190311\NugetPackages\boost.1.59.0.0\lib\native\include

D:\caffe20190311\caffe-master\include

D:\caffe20190311\caffe-master\include\caffe

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\include

Add the library directories from NugetPackages, the Caffe build output, and CUDA.

Properties - Linker - General - Additional Library Directories:

D:\caffe20190311\NugetPackages\OpenCV.2.4.10\build\native\lib\x64\v120\Release

D:\caffe20190311\NugetPackages\hdf5-v120-complete.1.8.15.2\lib\native\lib\x64

D:\caffe20190311\NugetPackages\OpenBLAS.0.2.14.1\lib\native\lib\x64

D:\caffe20190311\NugetPackages\gflags.2.1.2.1\build\native\x64\v120\dynamic\Lib

D:\caffe20190311\NugetPackages\glog.0.3.3.0\build\native\lib\x64\v120\Release\dynamic

D:\caffe20190311\NugetPackages\protobuf-v120.2.6.1\build\native\lib\x64\v120\Release

D:\caffe20190311\NugetPackages\boost_chrono-vc120.1.59.0.0\lib\native\address-model-64\lib

D:\caffe20190311\NugetPackages\boost_system-vc120.1.59.0.0\lib\native\address-model-64\lib

D:\caffe20190311\NugetPackages\boost_thread-vc120.1.59.0.0\lib\native\address-model-64\lib

D:\caffe20190311\NugetPackages\boost_filesystem-vc120.1.59.0.0\lib\native\address-model-64\lib

D:\caffe20190311\NugetPackages\boost_date_time-vc120.1.59.0.0\lib\native\address-model-64\lib

D:\caffe20190311\caffe-master\Build\x64\Release

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\lib\x64

Add the libraries to link against.

Properties - Linker - Input - Additional Dependencies:

libcaffe.lib

libprotobuf.lib

libglog.lib

gflags.lib

libopenblas.dll.a

hdf5.lib

hdf5_hl.lib

cublas.lib

cublas_device.lib

cuda.lib

cudadevrt.lib

cudnn.lib

cudart.lib

cufft.lib

cudart_static.lib

cufftw.lib

cusparse.lib

cusolver.lib

curand.lib

nppc.lib

opencv_highgui2410.lib

opencv_core2410.lib

opencv_imgproc2410.lib

kernel32.lib

user32.lib

gdi32.lib

winspool.lib

comdlg32.lib

advapi32.lib

shell32.lib

ole32.lib

oleaut32.lib

uuid.lib

odbc32.lib

odbccp32.lib

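On a machine without a GPU, Caffe can be called in CPU mode.

Remove everything CUDA-related from the include directories, the library directories, and the dependencies, and save the result as:

caffe_cpu_x64_release.props

Additional Include Directories: remove the last entry, the CUDA header directory.

Additional Library Directories: remove the last entry, the CUDA library directory.

Additional Dependencies: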

libcaffe.lib

libprotobuf.lib

libglog.lib

gflags.lib

libopenblas.dll.a

hdf5.lib

hdf5_hl.lib

opencv_highgui2410.lib

opencv_core2410.lib

opencv_imgproc2410.lib

kernel32.lib

user32.lib

gdi32.lib

winspool.lib

comdlg32.lib

advapi32.lib

shell32.lib

ole32.lib

oleaut32.lib

uuid.lib

odbc32.lib

odbccp32.lib

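This completes the caffe_x64_release.props property sheet.

Call Caffe with a prototxt file and a caffemodel file as input and print the names in the network (main.cpp below lists them with net->blob_names()):

caffe_layer.h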

#include <caffe/common.hpp>
#include <caffe/proto/caffe.pb.h>
#include <caffe/layers/batch_norm_layer.hpp>
#include <caffe/layers/bias_layer.hpp>
#include <caffe/layers/concat_layer.hpp>
#include <caffe/layers/conv_layer.hpp>
#include <caffe/layers/dropout_layer.hpp>
#include <caffe/layers/input_layer.hpp>
#include <caffe/layers/inner_product_layer.hpp>
#include <caffe/layers/lrn_layer.hpp>
#include <caffe/layers/pooling_layer.hpp>
#include <caffe/layers/relu_layer.hpp>
#include <caffe/layers/softmax_layer.hpp>
#include <caffe/layers/scale_layer.hpp>
#include <caffe/layers/prelu_layer.hpp>

namespace caffe
{
    extern INSTANTIATE_CLASS(BatchNormLayer);
    extern INSTANTIATE_CLASS(BiasLayer);
    extern INSTANTIATE_CLASS(InputLayer);
    extern INSTANTIATE_CLASS(InnerProductLayer);
    extern INSTANTIATE_CLASS(DropoutLayer);
    extern INSTANTIATE_CLASS(ConvolutionLayer);
    REGISTER_LAYER_CLASS(Convolution);
    extern INSTANTIATE_CLASS(ReLULayer);
    REGISTER_LAYER_CLASS(ReLU);
    extern INSTANTIATE_CLASS(PoolingLayer);
    REGISTER_LAYER_CLASS(Pooling);
    extern INSTANTIATE_CLASS(LRNLayer);
    REGISTER_LAYER_CLASS(LRN);
    extern INSTANTIATE_CLASS(SoftmaxLayer);
    REGISTER_LAYER_CLASS(Softmax);
    extern INSTANTIATE_CLASS(ScaleLayer);
    extern INSTANTIATE_CLASS(ConcatLayer);
    extern INSTANTIATE_CLASS(PReLULayer);
}

main.cpp

#include <caffe/caffe.hpp>
#include <cstdlib>
#include <iostream>
#include <string>
#include <vector>
#include "caffe_layer.h"

using namespace caffe;
using namespace std;

int main() {
    string net_file = "./infrared_mbfnet/antispoof-infrared.prototxt";       // prototxt file
    string weight_file = "./infrared_mbfnet/antispoof-infrared.caffemodel";  // caffemodel file

    Caffe::set_mode(Caffe::CPU);
    //Caffe::SetDevice(0);
    Phase phase = TEST;

    // Build the network from the prototxt, then load the trained weights.
    boost::shared_ptr<Net<float> > net(new caffe::Net<float>(net_file, phase));
    net->CopyTrainedLayersFrom(weight_file);

    // Print the name of every blob in the network.
    vector<string> blob_names = net->blob_names();
    for (size_t i = 0; i < blob_names.size(); i++) {
        cout << blob_names.at(i) << endl;
    }

    system("pause");
    return 0;
}

Note: if the model uses a PReLU layer, caffe_layer.h must include prelu_layer.hpp and declare extern INSTANTIATE_CLASS(PReLULayer); otherwise Caffe reports an error that the layer cannot be found.

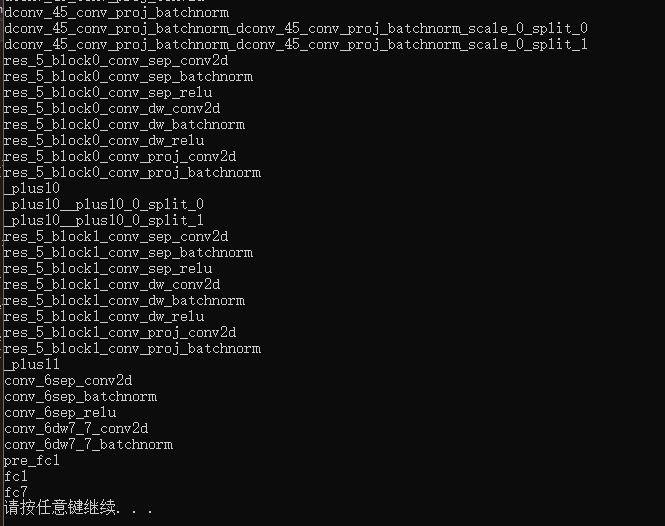
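A likely explanation for the note above, which the original post does not spell out: Caffe registers layer types through static objects, and when libcaffe is linked as a static library the linker can drop object files that nothing references, so those registrations never run. The sketch below shows that self-registration pattern in isolation. It is a simplified stand-in and not Caffe's actual code; `DemoRegistry`, `Registerer`, and `REGISTER_DEMO_LAYER` are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical base class standing in for a network layer.
struct Layer {
    virtual ~Layer() {}
    virtual std::string type() const = 0;
};

// Hypothetical registry mapping a type name to a factory function.
class DemoRegistry {
public:
    typedef std::function<std::unique_ptr<Layer>()> Creator;

    // A function-local static avoids static-initialization-order problems.
    static std::map<std::string, Creator>& table() {
        static std::map<std::string, Creator> t;
        return t;
    }
    static void add(const std::string& name, Creator c) { table()[name] = c; }
    static bool has(const std::string& name) { return table().count(name) != 0; }
    static std::unique_ptr<Layer> create(const std::string& name) {
        assert(has(name));  // an unregistered type is the "layer not found" case
        return table().at(name)();
    }
};

// A static object of this type registers a layer as a side effect of its
// construction. If the object file that defines it is dropped by the linker,
// the constructor never runs and the type stays unknown at run time.
struct Registerer {
    Registerer(const std::string& name, DemoRegistry::Creator c) {
        DemoRegistry::add(name, c);
    }
};

#define REGISTER_DEMO_LAYER(Name)                          \
    static Registerer g_registerer_##Name(#Name, []() {    \
        return std::unique_ptr<Layer>(new Name##Layer());  \
    })

struct ReLULayer : Layer {
    std::string type() const override { return "ReLU"; }
};
REGISTER_DEMO_LAYER(ReLU);

struct PReLULayer : Layer {
    std::string type() const override { return "PReLU"; }
};
// PReLU is intentionally left unregistered to mimic a lost registration.
```

In this sketch `DemoRegistry::has("ReLU")` is true while `DemoRegistry::has("PReLU")` is false. Referencing each layer from a header that the application compiles, as caffe_layer.h does, is one way to keep the relevant code in the final binary.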

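Output:

Running a forward pass with Caffe from C++:

In the Caffe source tree, add ./caffe-master/examples/cpp_classification/classification.cpp to the VS2013 project and apply the caffe_x64_release property sheet.

In the original classification.cpp, main takes four inputs: model_file, trained_file, label_file, and mean_file. The mean file stores channels x height x width float values, each of which is the mean of all training samples at that position. In some cases the mean is already fixed, for example when preprocessing is imgdata = (src - 127.5) * 0.0078125, so the mean can be assigned directly to the Classifier member variable mean_.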

void Classifier::SetMean1()

{

mean_ = cv::Mat(input_geometry_, CV_32FC3, cv::Scalar(127.5, 127.5, 127.5));

}

main then needs only three inputs: the prototxt, caffemodel, and label.txt files.
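The fixed normalization mentioned above, (src - 127.5) * 0.0078125, can be checked in isolation. The sketch below is a standalone illustration that needs neither Caffe nor OpenCV; `NormalizePixel` and `NormalizeBuffer` are hypothetical helper names, not part of classification.cpp.

```cpp
#include <cstddef>
#include <vector>

// Map an 8-bit pixel value to roughly [-1, 1]: (src - 127.5) * 0.0078125.
// 0.0078125 is exactly 1/128, so 0 maps to -0.99609375 and 255 to 0.99609375.
inline float NormalizePixel(unsigned char src) {
    return (static_cast<float>(src) - 127.5f) * 0.0078125f;
}

// Apply the same normalization to a whole buffer of pixel values.
std::vector<float> NormalizeBuffer(const std::vector<unsigned char>& pixels) {
    std::vector<float> out;
    out.reserve(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i)
        out.push_back(NormalizePixel(pixels[i]));
    return out;
}
```

Because the scale factor is a power of two, the endpoints are exact in floating point, which makes the function easy to compare against the values the network was trained with.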

The project contains two files, caffe_layer.h and classification.cpp.

classification.cpp

#define USE_OPENCV 1
#include <caffe/caffe.hpp>
#ifdef USE_OPENCV
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#endif  // USE_OPENCV
#include <algorithm>
#include <cstdlib>
#include <fstream>
#include <iosfwd>
#include <iostream>
#include <memory>
#include <string>
#include <utility>
#include <vector>
#include <time.h>
#include "caffe_layer.h"

#ifdef USE_OPENCV
using namespace caffe;  // NOLINT(build/namespaces)
using std::string;
using namespace cv;

/* Pair (label, confidence) representing a prediction. */
typedef std::pair<string, float> Prediction;

class Classifier {
public:
    Classifier(const string& model_file,
               const string& trained_file,
               const string& label_file);

    std::vector<Prediction> Classify(const cv::Mat& img, int N = 5);
    int Classify1(const cv::Mat& img);

private:
    void SetMean(const string& mean_file);
    void SetMean1();
    std::vector<float> Predict(const cv::Mat& img);
    void WrapInputLayer(std::vector<cv::Mat>* input_channels);
    void Preprocess(const cv::Mat& img,
                    std::vector<cv::Mat>* input_channels);

private:
    shared_ptr<Net<float> > net_;
    cv::Size input_geometry_;
    int num_channels_;
    cv::Mat mean_;
    std::vector<string> labels_;
};

Classifier::Classifier(const string& model_file,
                       const string& trained_file,
                       const string& label_file) {
#ifdef CPU_ONLY
    Caffe::set_mode(Caffe::CPU);
#else
    Caffe::set_mode(Caffe::GPU);
#endif

    /* Load the network. */
    net_.reset(new Net<float>(model_file, TEST));
    net_->CopyTrainedLayersFrom(trained_file);

    CHECK_EQ(net_->num_inputs(), 1) << "Network should have exactly one input.";
    CHECK_EQ(net_->num_outputs(), 1) << "Network should have exactly one output.";

    Blob<float>* input_layer = net_->input_blobs()[0];
    num_channels_ = input_layer->channels();
    //std::cout << num_channels_ << std::endl;
    CHECK(num_channels_ == 3 || num_channels_ == 1)
        << "Input layer should have 1 or 3 channels.";
    input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

    /* Use a fixed mean instead of loading the binaryproto mean file. */
    //SetMean(mean_file);
    SetMean1();

    /* Load labels. */
    std::ifstream labels(label_file.c_str());
    CHECK(labels) << "Unable to open labels file " << label_file;
    string line;
    while (std::getline(labels, line))
        labels_.push_back(string(line));

    Blob<float>* output_layer = net_->output_blobs()[0];
    CHECK_EQ(labels_.size(), output_layer->channels())
        << "Number of labels is different from the output layer dimension.";
}

static bool PairCompare(const std::pair<float, int>& lhs,
                        const std::pair<float, int>& rhs) {
    return lhs.first > rhs.first;
}

/* Return the indices of the top N values of vector v. */

static std::vector<int> Argmax(const std::vector<float>& v, int N) {
    std::vector<std::pair<float, int> > pairs;
    for (size_t i = 0; i < v.size(); ++i)
        pairs.push_back(std::make_pair(v[i], static_cast<int>(i)));
    std::partial_sort(pairs.begin(), pairs.begin() + N, pairs.end(), PairCompare);

    std::vector<int> result;
    for (int i = 0; i < N; ++i)
        result.push_back(pairs[i].second);
    return result;
}

/* Return the top N predictions. */
std::vector<Prediction> Classifier::Classify(const cv::Mat& img, int N) {
    std::vector<float> output = Predict(img);

    N = std::min<int>(labels_.size(), N);
    std::vector<int> maxN = Argmax(output, N);
    std::vector<Prediction> predictions;
    for (int i = 0; i < N; ++i) {
        int idx = maxN[i];
        predictions.push_back(std::make_pair(labels_[idx], output[idx]));
    }
    return predictions;
}

/* Return the index of the class with the highest score. */
int Classifier::Classify1(const cv::Mat& img) {
    std::vector<float> output = Predict(img);

    // Track both the running maximum and its index; updating only the
    // index would compare every score against output[0].
    float max = output[0];
    int max_id = 0;
    for (size_t i = 1; i < output.size(); i++)
    {
        if (output[i] > max)
        {
            max = output[i];
            max_id = static_cast<int>(i);
        }
    }
    return max_id;
}

/* Load the mean file in binaryproto format. */

void Classifier::SetMean(const string& mean_file) {
    BlobProto blob_proto;
    ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

    /* Convert from BlobProto to Blob<float> */
    Blob<float> mean_blob;
    mean_blob.FromProto(blob_proto);
    CHECK_EQ(mean_blob.channels(), num_channels_)
        << "Number of channels of mean file doesn't match input layer.";

    /* The format of the mean file is planar 32-bit float BGR or grayscale. */
    std::vector<cv::Mat> channels;
    float* data = mean_blob.mutable_cpu_data();
    for (int i = 0; i < num_channels_; ++i) {
        /* Extract an individual channel. */
        cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);
        channels.push_back(channel);
        data += mean_blob.height() * mean_blob.width();
    }

    /* Merge the separate channels into a single image. */
    cv::Mat mean;
    cv::merge(channels, mean);

    /* Compute the global mean pixel value and create a mean image
     * filled with this value. */
    cv::Scalar channel_mean = cv::mean(mean);
    mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);
}

/* Use a fixed mean of 127.5 per channel (assumes a 3-channel input). */
void Classifier::SetMean1()
{
    mean_ = cv::Mat(input_geometry_, CV_32FC3, cv::Scalar(127.5, 127.5, 127.5));
}

std::vector<float> Classifier::Predict(const cv::Mat& img) {
    Blob<float>* input_layer = net_->input_blobs()[0];
    input_layer->Reshape(1, num_channels_,
                         input_geometry_.height, input_geometry_.width);
    /* Forward dimension change to all layers. */
    net_->Reshape();

    std::vector<cv::Mat> input_channels;
    WrapInputLayer(&input_channels);

    Preprocess(img, &input_channels);

    net_->Forward();

    /* Copy the output layer to a std::vector */
    Blob<float>* output_layer = net_->output_blobs()[0];
    const float* begin = output_layer->cpu_data();
    const float* end = begin + output_layer->channels();
    return std::vector<float>(begin, end);
}

/* Wrap the input layer of the network in separate cv::Mat objects
 * (one per channel). This way we save one memcpy operation and we
 * don't need to rely on cudaMemcpy2D. The last preprocessing
 * operation will write the separate channels directly to the input
 * layer. */

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels) {
    Blob<float>* input_layer = net_->input_blobs()[0];

    int width = input_layer->width();
    int height = input_layer->height();
    float* input_data = input_layer->mutable_cpu_data();
    for (int i = 0; i < input_layer->channels(); ++i) {
        cv::Mat channel(height, width, CV_32FC1, input_data);
        input_channels->push_back(channel);
        input_data += width * height;
    }
}

void Classifier::Preprocess(const cv::Mat& img,
                            std::vector<cv::Mat>* input_channels) {
    /* Convert the input image to the input image format of the network. */
    cv::Mat sample;
    if (img.channels() == 3 && num_channels_ == 1)
        cv::cvtColor(img, sample, cv::COLOR_BGR2GRAY);
    else if (img.channels() == 4 && num_channels_ == 1)
        cv::cvtColor(img, sample, cv::COLOR_BGRA2GRAY);
    else if (img.channels() == 4 && num_channels_ == 3)
        cv::cvtColor(img, sample, cv::COLOR_BGRA2BGR);
    else if (img.channels() == 1 && num_channels_ == 3)
        cv::cvtColor(img, sample, cv::COLOR_GRAY2BGR);
    else
        sample = img;

    cv::Mat sample_resized;
    if (sample.size() != input_geometry_)
        cv::resize(sample, sample_resized, input_geometry_);
    else
        sample_resized = sample;

    cv::Mat sample_float;
    if (num_channels_ == 3)
        sample_resized.convertTo(sample_float, CV_32FC3);
    else
        sample_resized.convertTo(sample_float, CV_32FC1);

    cv::Mat sample_normalized;
    cv::subtract(sample_float, mean_, sample_normalized);

    /* Scale by 0.0078125 (1/128), giving (src - 127.5) * 0.0078125.
     * The result has the network input size, not the size of the original
     * image, so the split below writes into the wrapped input blob. */
    cv::Mat img_scale = sample_normalized * 0.0078125;

    /* This operation will write the separate BGR planes directly to the
     * input layer of the network because it is wrapped by the cv::Mat
     * objects in input_channels. */
    cv::split(img_scale, *input_channels);

    CHECK(reinterpret_cast<float*>(input_channels->at(0).data)
          == net_->input_blobs()[0]->cpu_data())
        << "Input channels are not wrapping the input layer of the network.";
}

int main(int argc, char** argv)

{
    ::google::InitGoogleLogging(argv[0]);

    string model_file = "./infrared_mbfnet/antispoof-infrared.prototxt";
    string trained_file = "./infrared_mbfnet/antispoof-infrared.caffemodel";
    string label_file = "infrared_mbfnet/infrared_label.txt";
    Classifier classifier(model_file, trained_file, label_file);

    string file = "img/0/outdoor_6_964.jpg";
    std::cout << file << " : ";

    cv::Mat img = cv::imread(file, 1);
    CHECK(!img.empty()) << "Unable to decode image " << file;
    int predict_id = classifier.Classify1(img);
    std::cout << predict_id << std::endl;

    system("pause");
    return 0;
}

/*
// Alternative main: classify every image listed in infrared_test.txt and
// report the accuracy. The true label is the name of the directory that
// contains the image, e.g. "img/0/outdoor_6_964.jpg" has label 0.
int main(int argc, char** argv)
{
    ::google::InitGoogleLogging(argv[0]);

    string model_file = "./infrared_mbfnet/antispoof-infrared.prototxt";
    string trained_file = "./infrared_mbfnet/antispoof-infrared.caffemodel";
    string label_file = "infrared_mbfnet/infrared_label.txt";
    Classifier classifier(model_file, trained_file, label_file);

    //string file = "img/0/outdoor_6_964.jpg";
    string txtpath = "infrared_test.txt";
    std::ifstream fin(txtpath.c_str());
    if (!fin.is_open())
    {
        return -1;
    }
    string line;
    int total_num = 0;
    int pos_num = 0;
    // Read until extraction fails; testing eof() before reading would
    // process the last line twice.
    while (fin >> line)
    {
        total_num += 1;

        size_t pos1 = line.rfind('/');
        size_t pos2 = line.rfind('/', pos1 - 1);
        string true_label = line.substr(pos2 + 1, pos1 - pos2 - 1);

        cv::Mat img = cv::imread(line, 1);
        CHECK(!img.empty()) << "Unable to decode image " << line;
        int predict_id = classifier.Classify1(img);

        std::cout << line << " : " << predict_id << std::endl;
        //std::cout << true_label << " " << predict_id << std::endl;
        if (atoi(true_label.c_str()) == predict_id)
            pos_num += 1;
    }
    float accuracy = pos_num * 1.0 / total_num;
    std::cout << "accuracy:" << accuracy << std::endl;

    system("pause");
    return 0;
}
*/

#else
int main(int argc, char** argv) {
    LOG(FATAL) << "This example requires OpenCV; compile with USE_OPENCV.";
}
#endif  // USE_OPENCV
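Classify1 is meant to return the index of the highest-scoring class. The standalone sketch below shows that step on a plain vector, without Caffe or OpenCV. `ArgmaxIndex` and `ArgmaxIndexLoop` are hypothetical names used only for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Index of the largest score; ties resolve to the first maximum.
int ArgmaxIndex(const std::vector<float>& scores) {
    assert(!scores.empty());
    return static_cast<int>(
        std::max_element(scores.begin(), scores.end()) - scores.begin());
}

// The same result with an explicit loop. Both the running maximum and its
// index are updated; if only the index were updated, every score would be
// compared against scores[0] and the last such score would win.
int ArgmaxIndexLoop(const std::vector<float>& scores) {
    assert(!scores.empty());
    float best = scores[0];
    int best_id = 0;
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > best) {
            best = scores[i];
            best_id = static_cast<int>(i);
        }
    }
    return best_id;
}
```

For the scores {0.1, 0.9, 0.5} both functions return 1, whereas a loop that never updates the running maximum would return 2.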

To integrate this inference code into another project, separate the class declaration, the class implementation, and main:

caffe_layer.h (as shown above)

classification.h

#pragma once

#include <caffe/caffe.hpp>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <algorithm>
#include <fstream>
#include <iosfwd>
#include <iostream>
#include <memory>
#include <string>
#include <utility>
#include <vector>
#include <time.h>

using namespace caffe;  // NOLINT(build/namespaces)
using std::string;
using namespace cv;

/* Pair (label, confidence) representing a prediction. */
typedef std::pair<string, float> Prediction;

class Classifier {
public:
    Classifier(const string& model_file,
               const string& trained_file,
               const string& label_file);

    std::vector<Prediction> Classify(const cv::Mat& img, int N = 5);
    int Classify1(const cv::Mat& img);

private:
    void SetMean(const string& mean_file);
    void SetMean1();
    std::vector<float> Predict(const cv::Mat& img);
    void WrapInputLayer(std::vector<cv::Mat>* input_channels);
    void Preprocess(const cv::Mat& img,
                    std::vector<cv::Mat>* input_channels);

private:
    shared_ptr<Net<float> > net_;
    cv::Size input_geometry_;
    int num_channels_;
    cv::Mat mean_;
    std::vector<string> labels_;
};

classification.cpp

#include "classification.h"

Classifier::Classifier(const string& model_file,
                       const string& trained_file,
                       const string& label_file) {
#ifdef CPU_ONLY
    Caffe::set_mode(Caffe::CPU);
#else
    Caffe::set_mode(Caffe::GPU);
#endif

    /* Load the network. */
    net_.reset(new Net<float>(model_file, TEST));
    net_->CopyTrainedLayersFrom(trained_file);

    CHECK_EQ(net_->num_inputs(), 1) << "Network should have exactly one input.";
    CHECK_EQ(net_->num_outputs(), 1) << "Network should have exactly one output.";

    Blob<float>* input_layer = net_->input_blobs()[0];
    num_channels_ = input_layer->channels();
    //std::cout << num_channels_ << std::endl;
    CHECK(num_channels_ == 3 || num_channels_ == 1)
        << "Input layer should have 1 or 3 channels.";
    input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

    /* Use a fixed mean instead of loading the binaryproto mean file. */
    //SetMean(mean_file);
    SetMean1();

    /* Load labels. */
    std::ifstream labels(label_file.c_str());
    CHECK(labels) << "Unable to open labels file " << label_file;
    string line;
    while (std::getline(labels, line))
        labels_.push_back(string(line));

    Blob<float>* output_layer = net_->output_blobs()[0];
    CHECK_EQ(labels_.size(), output_layer->channels())
        << "Number of labels is different from the output layer dimension.";
}

static bool PairCompare(const std::pair<float, int>& lhs,
                        const std::pair<float, int>& rhs) {
    return lhs.first > rhs.first;
}

/* Return the indices of the top N values of vector v. */

static std::vector<int> Argmax(const std::vector<float>& v, int N) {
    std::vector<std::pair<float, int> > pairs;
    for (size_t i = 0; i < v.size(); ++i)
        pairs.push_back(std::make_pair(v[i], static_cast<int>(i)));
    std::partial_sort(pairs.begin(), pairs.begin() + N, pairs.end(), PairCompare);

    std::vector<int> result;
    for (int i = 0; i < N; ++i)
        result.push_back(pairs[i].second);
    return result;
}

/* Return the top N predictions. */
std::vector<Prediction> Classifier::Classify(const cv::Mat& img, int N) {
    std::vector<float> output = Predict(img);

    N = std::min<int>(labels_.size(), N);
    std::vector<int> maxN = Argmax(output, N);
    std::vector<Prediction> predictions;
    for (int i = 0; i < N; ++i) {
        int idx = maxN[i];
        predictions.push_back(std::make_pair(labels_[idx], output[idx]));
    }
    return predictions;
}

/* Return the index of the class with the highest score. */
int Classifier::Classify1(const cv::Mat& img) {
    std::vector<float> output = Predict(img);

    // Track both the running maximum and its index; updating only the
    // index would compare every score against output[0].
    float max = output[0];
    int max_id = 0;
    for (size_t i = 1; i < output.size(); i++)
    {
        if (output[i] > max)
        {
            max = output[i];
            max_id = static_cast<int>(i);
        }
    }
    return max_id;
}

/* Load the mean file in binaryproto format. */

void Classifier::SetMean(const string& mean_file) {
    BlobProto blob_proto;
    ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

    /* Convert from BlobProto to Blob<float> */
    Blob<float> mean_blob;
    mean_blob.FromProto(blob_proto);
    CHECK_EQ(mean_blob.channels(), num_channels_)
        << "Number of channels of mean file doesn't match input layer.";

    /* The format of the mean file is planar 32-bit float BGR or grayscale. */
    std::vector<cv::Mat> channels;
    float* data = mean_blob.mutable_cpu_data();
    for (int i = 0; i < num_channels_; ++i) {
        /* Extract an individual channel. */
        cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);
        channels.push_back(channel);
        data += mean_blob.height() * mean_blob.width();
    }

    /* Merge the separate channels into a single image. */
    cv::Mat mean;
    cv::merge(channels, mean);

    /* Compute the global mean pixel value and create a mean image
     * filled with this value. */
    cv::Scalar channel_mean = cv::mean(mean);
    mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);
}

/* Use a fixed mean of 127.5 per channel (assumes a 3-channel input). */
void Classifier::SetMean1()
{
    mean_ = cv::Mat(input_geometry_, CV_32FC3, cv::Scalar(127.5, 127.5, 127.5));
}

std::vector<float> Classifier::Predict(const cv::Mat& img) {
    Blob<float>* input_layer = net_->input_blobs()[0];
    input_layer->Reshape(1, num_channels_,
                         input_geometry_.height, input_geometry_.width);
    /* Forward dimension change to all layers. */
    net_->Reshape();

    std::vector<cv::Mat> input_channels;
    WrapInputLayer(&input_channels);

    Preprocess(img, &input_channels);

    net_->Forward();

    /* Copy the output layer to a std::vector */
    Blob<float>* output_layer = net_->output_blobs()[0];
    const float* begin = output_layer->cpu_data();
    const float* end = begin + output_layer->channels();
    return std::vector<float>(begin, end);
}

/* Wrap the input layer of the network in separate cv::Mat objects
 * (one per channel). This way we save one memcpy operation and we
 * don't need to rely on cudaMemcpy2D. The last preprocessing
 * operation will write the separate channels directly to the input
 * layer. */

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels) {
    Blob<float>* input_layer = net_->input_blobs()[0];

    int width = input_layer->width();
    int height = input_layer->height();
    float* input_data = input_layer->mutable_cpu_data();
    for (int i = 0; i < input_layer->channels(); ++i) {
        cv::Mat channel(height, width, CV_32FC1, input_data);
        input_channels->push_back(channel);
        input_data += width * height;
    }
}

void Classifier::Preprocess(const cv::Mat& img,
                            std::vector<cv::Mat>* input_channels) {
    /* Convert the input image to the input image format of the network. */
    cv::Mat sample;
    if (img.channels() == 3 && num_channels_ == 1)
        cv::cvtColor(img, sample, cv::COLOR_BGR2GRAY);
    else if (img.channels() == 4 && num_channels_ == 1)
        cv::cvtColor(img, sample, cv::COLOR_BGRA2GRAY);
    else if (img.channels() == 4 && num_channels_ == 3)
        cv::cvtColor(img, sample, cv::COLOR_BGRA2BGR);
    else if (img.channels() == 1 && num_channels_ == 3)
        cv::cvtColor(img, sample, cv::COLOR_GRAY2BGR);
    else
        sample = img;

    cv::Mat sample_resized;
    if (sample.size() != input_geometry_)
        cv::resize(sample, sample_resized, input_geometry_);
    else
        sample_resized = sample;

    cv::Mat sample_float;
    if (num_channels_ == 3)
        sample_resized.convertTo(sample_float, CV_32FC3);
    else
        sample_resized.convertTo(sample_float, CV_32FC1);

    cv::Mat sample_normalized;
    cv::subtract(sample_float, mean_, sample_normalized);

    /* Scale by 0.0078125 (1/128), giving (src - 127.5) * 0.0078125.
     * The result has the network input size, not the size of the original
     * image, so the split below writes into the wrapped input blob. */
    cv::Mat img_scale = sample_normalized * 0.0078125;

    /* This operation will write the separate BGR planes directly to the
     * input layer of the network because it is wrapped by the cv::Mat
     * objects in input_channels. */
    cv::split(img_scale, *input_channels);

    CHECK(reinterpret_cast<float*>(input_channels->at(0).data)
          == net_->input_blobs()[0]->cpu_data())
        << "Input channels are not wrapping the input layer of the network.";
}
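WrapInputLayer and the final cv::split in Preprocess exist because OpenCV stores pixels interleaved (B G R, B G R, ...) while the network input blob is planar (all B, then all G, then all R). The sketch below reproduces that layout change on plain vectors so it can be checked without Caffe or OpenCV. `InterleavedToPlanar` is a hypothetical helper, not a function from either library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Convert an interleaved H x W x C buffer into planar C x H x W order.
std::vector<float> InterleavedToPlanar(const std::vector<float>& hwc,
                                       int height, int width, int channels) {
    assert(hwc.size() ==
           static_cast<std::size_t>(height) * width * channels);
    std::vector<float> chw(hwc.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            for (int c = 0; c < channels; ++c) {
                // Source index walks pixels first, destination walks planes first.
                const std::size_t src =
                    (static_cast<std::size_t>(y) * width + x) * channels + c;
                const std::size_t dst =
                    (static_cast<std::size_t>(c) * height + y) * width + x;
                chw[dst] = hwc[src];
            }
        }
    }
    return chw;
}
```

For a 1 x 2 image with pixels (1, 2, 3) and (4, 5, 6), the planar result is 1, 4, 2, 5, 3, 6. In classification.cpp this copy is avoided because each cv::Mat in input_channels already points into the corresponding plane of the blob.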

main.cpp

#include <cstdlib>
#include <iostream>
#include "classification.h"
#include "caffe_layer.h"

int main(int argc, char** argv)
{
    ::google::InitGoogleLogging(argv[0]);

    string model_file = "./infrared_mbfnet/antispoof-infrared.prototxt";
    string trained_file = "./infrared_mbfnet/antispoof-infrared.caffemodel";
    string label_file = "infrared_mbfnet/infrared_label.txt";
    Classifier classifier(model_file, trained_file, label_file);

    string file = "img/0/outdoor_6_964.jpg";
    std::cout << file << " : ";

    cv::Mat img = cv::imread(file, 1);
    CHECK(!img.empty()) << "Unable to decode image " << file;
    int predict_id = classifier.Classify1(img);
    std::cout << predict_id << std::endl;

    system("pause");
    return 0;
}

/*
// Alternative main: classify every image listed in infrared_test.txt and
// report the accuracy. The true label is the name of the directory that
// contains the image, e.g. "img/0/outdoor_6_964.jpg" has label 0.
int main(int argc, char** argv)
{
    ::google::InitGoogleLogging(argv[0]);

    string model_file = "./infrared_mbfnet/antispoof-infrared.prototxt";
    string trained_file = "./infrared_mbfnet/antispoof-infrared.caffemodel";
    string label_file = "infrared_mbfnet/infrared_label.txt";
    Classifier classifier(model_file, trained_file, label_file);

    //string file = "img/0/outdoor_6_964.jpg";
    string txtpath = "infrared_test.txt";
    std::ifstream fin(txtpath.c_str());
    if (!fin.is_open())
    {
        return -1;
    }
    string line;
    int total_num = 0;
    int pos_num = 0;
    // Read until extraction fails; testing eof() before reading would
    // process the last line twice.
    while (fin >> line)
    {
        total_num += 1;

        size_t pos1 = line.rfind('/');
        size_t pos2 = line.rfind('/', pos1 - 1);
        string true_label = line.substr(pos2 + 1, pos1 - pos2 - 1);

        cv::Mat img = cv::imread(line, 1);
        CHECK(!img.empty()) << "Unable to decode image " << line;
        int predict_id = classifier.Classify1(img);

        std::cout << line << " : " << predict_id << std::endl;
        //std::cout << true_label << " " << predict_id << std::endl;
        if (atoi(true_label.c_str()) == predict_id)
            pos_num += 1;
    }
    float accuracy = pos_num * 1.0 / total_num;
    std::cout << "accuracy:" << accuracy << std::endl;

    system("pause");
    return 0;
}
*/
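The commented-out batch main derives each image's true label from the name of its parent directory and then computes accuracy. The sketch below isolates that bookkeeping so it can be tested without a model. `ParentDirName` and `Accuracy` are hypothetical helpers, and the paths in the usage note are made up for illustration.

```cpp
#include <cstddef>
#include <cstdlib>
#include <string>
#include <utility>
#include <vector>

// Name of the directory that directly contains the file, for example
// "img/0/outdoor_6_964.jpg" gives "0". Returns "" when there is no such
// directory component.
std::string ParentDirName(const std::string& path) {
    const std::size_t last = path.rfind('/');
    if (last == std::string::npos || last == 0) return "";
    const std::size_t prev = path.rfind('/', last - 1);
    const std::size_t begin = (prev == std::string::npos) ? 0 : prev + 1;
    return path.substr(begin, last - begin);
}

// Fraction of (path, predicted id) pairs whose directory label equals the
// prediction. Returns 0 for an empty list instead of dividing by zero.
double Accuracy(const std::vector<std::pair<std::string, int> >& results) {
    if (results.empty()) return 0.0;
    int correct = 0;
    for (std::size_t i = 0; i < results.size(); ++i) {
        const int truth = std::atoi(ParentDirName(results[i].first).c_str());
        if (truth == results[i].second) ++correct;
    }
    return static_cast<double>(correct) / results.size();
}
```

With the pairs ("img/0/a.jpg", 0) and ("img/1/b.jpg", 0), the first prediction matches its directory label and the second does not, so `Accuracy` returns 0.5.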

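Note: this project was built and run with VS2013 in Release mode. VS2013 is used because NugetPackages ships lib and dll files only for VS2013. Release is used because Caffe itself was compiled in Release mode; Debug mode has not been tried.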
