在Eclipse上运行Spark(Standalone,Yarn-Client)

欢迎转载,且请注明出处,在文章页面明显位置给出原文连接。

原文链接:http://www.cnblogs.com/zdfjf/p/5175566.html

我们知道有eclipse的Hadoop插件,能够在eclipse上操作hdfs上的文件和新建mapreduce程序,以及以Run On Hadoop方式运行程序。那么我们可不可以直接在eclipse上运行Spark程序,提交到集群上以YARN-Client方式运行,或者以Standalone方式运行呢?

答案是可以的。下面我来介绍一下如何在eclipse上运行Spark的wordcount程序。我用的hadoop 版本为2.6.2,spark版本为1.5.2。

1.Standalone方式运行

1.1 新建一个普通的java工程即可,下面直接上代码,

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/ package com.frank.spark; import scala.Tuple2;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction; import java.util.Arrays;

import java.util.List;

import java.util.regex.Pattern; public final class JavaWordCount {

private static final Pattern SPACE = Pattern.compile(" "); public static void main(String[] args) throws Exception { if (args.length < 1) {

System.err.println("Usage: JavaWordCount <file>");

System.exit(1);

} SparkConf sparkConf = new SparkConf().setAppName("JavaWordCount");

sparkConf.setMaster("spark://192.168.0.1:7077");

JavaSparkContext ctx = new JavaSparkContext(sparkConf);

ctx.addJar("C:\\Users\\Frank\\sparkwordcount.jar");

JavaRDD<String> lines = ctx.textFile(args[0], 1); JavaRDD<String> words = lines.flatMap(new FlatMapFunction<String, String>() {

@Override

public Iterable<String> call(String s) {

return Arrays.asList(SPACE.split(s));

}

}); JavaPairRDD<String, Integer> ones = words.mapToPair(new PairFunction<String, String, Integer>() {

@Override

public Tuple2<String, Integer> call(String s) {

return new Tuple2<String, Integer>(s, 1);

}

}); JavaPairRDD<String, Integer> counts = ones.reduceByKey(new Function2<Integer, Integer, Integer>() {

@Override

public Integer call(Integer i1, Integer i2) {

return i1 + i2;

}

}); List<Tuple2<String, Integer>> output = counts.collect();

for (Tuple2<?,?> tuple : output) {

System.out.println(tuple._1() + ": " + tuple._2());

}

ctx.stop();

}

}

代码直接从spark安装包解压后在examples/src/main/java/org/apache/spark/examples/JavaWordCount.java拷贝出来,唯一不同的地方在增加了44行和46行,44行设置了Master,为hadoop的master 结点的IP,端口号为7077。46行设置了工程打包后放置在windows上的路径。

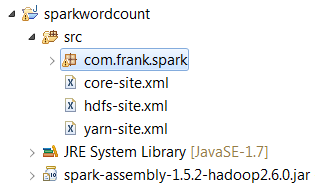

1.2 加入spark依赖包spark-assembly-1.5.2-hadoop2.6.0.jar,这个包可以从spark 安装包解压 后在lib目录下。

1.3 配置要统计的文件在hdfs上的路径

Run As->Run Configurations

点击Arguments,因为程序中47行要求输入被统计的文件路径,所以在这里配置以下,文件必须放在hdfs上,所以这里的ip也是你的hadoop的master机器的ip.

1.4 接下来就是Run程序了,统计的结果会显示在eclipse的控制台。你也可以通过spark的web页面查看刚才提交的程序。

2. 以YARN-Client方式运行

2.1 先上代码

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/ package com.frank.spark; import scala.Tuple2;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction; import java.util.Arrays;

import java.util.List;

import java.util.regex.Pattern; public final class JavaWordCount {

private static final Pattern SPACE = Pattern.compile(" "); public static void main(String[] args) throws Exception { 38 System.setProperty("HADOOP_USER_NAME", "hadoop"); if (args.length < 1) {

System.err.println("Usage: JavaWordCount <file>");

System.exit(1);

} SparkConf sparkConf = new SparkConf().setAppName("JavaWordCountByFrank01");

sparkConf.setMaster("yarn-client");

sparkConf.set("spark.yarn.dist.files", "C:\\software\\workspace\\sparkwordcount\\src\\yarn-site.xml");

sparkConf.set("spark.yarn.jar", "hdfs://192.168.0.1:9000/user/hadoop/spark-assembly-1.5.2-hadoop2.6.0.jar"); JavaSparkContext ctx = new JavaSparkContext(sparkConf);

ctx.addJar("C:\\Users\\Frank\\sparkwordcount.jar");

JavaRDD<String> lines = ctx.textFile(args[0], 1); JavaRDD<String> words = lines.flatMap(new FlatMapFunction<String, String>() {

@Override

public Iterable<String> call(String s) {

return Arrays.asList(SPACE.split(s));

}

}); JavaPairRDD<String, Integer> ones = words.mapToPair(new PairFunction<String, String, Integer>() {

@Override

public Tuple2<String, Integer> call(String s) {

return new Tuple2<String, Integer>(s, 1);

}

}); JavaPairRDD<String, Integer> counts = ones.reduceByKey(new Function2<Integer, Integer, Integer>() {

@Override

public Integer call(Integer i1, Integer i2) {

return i1 + i2;

}

}); List<Tuple2<String, Integer>> output = counts.collect();

for (Tuple2<?,?> tuple : output) {

System.out.println(tuple._1() + ": " + tuple._2());

}

ctx.stop();

}

}2.2 程序解释

38行,如果你的windows用户名和集群上用户名不一样,这里就应该配置一下。比如我windows用户名为Frank,而装有hadoop的集群username为hadoop,这里我就以38行这样设置。

46行,这里配置以yarn-client方式

48行,以这种方式运行时候,每一次运行都会把spark-assembly-1.5.2-hadoop2.6.0.jar包上传到hdfs下这次生成的application-id文件夹下,会耗费几分钟时间,这里也可以配置spark.yarn.jar,先把spark-assembly-1.5.2-hadoop2.6.0.jar上传到hdfs一个目录下,这样就不用每次从windows上传到hdfs下了。参考https://spark.apache.org/docs/1.5.2/running-on-yarn.html

spark.yarn.jar :The location of the Spark jar file, in case overriding the default location is desired. By default, Spark on YARN will use a Spark jar installed locally, but the Spark jar can also be in a world-readable location on HDFS. This allows YARN to cache it on nodes so that it doesn't need to be distributed each time an application runs. To point to a jar on HDFS, for example, set this configuration to "hdfs:///some/path".

51行,把项目打包后放在windows上的路径。

2.3 程序配置

把3个配置文件放在src下,配置文件从hadoop的linux机器上拷贝下来。

2.4 配置要统计的文件在hdfs上的路径

参考1.3,同样结果显示在eclipse控制台。

最新文章

- java---构造器

- 为什么Pojo类没有注解也没有spring中配置<bean>也能够被加载到容器中。

- 如何在HTML5 Canvas 里面显示 Font Awesome 图标

- 《UML大战需求分析》阅读笔记6

- Python自动化运维工具fabric的安装

- 《高性能JavaScript》笔记

- bootStrap-2

- java 日期格式转换EEE MMM dd HH:mm:ss z yyyy

- 在这里总结一些iOS开发中的小技巧,能大大方便我们的开发,持续更新。

- 1972: [Sdoi2010]猪国杀 - BZOJ

- ssh添加公钥

- Struts2 一、 视图转发跳转

- iOS原生refresh(UIRefreshControl)

- (转)Hadoop MapReduce链式实践--ChainReducer

- 浅谈分析表格布局与Div+CSS布局的区别

- 《3》CentOS7.0+OpenStack+kvm云平台部署—配置Glance

- 使用Unity NGUI-InputField组件输入时发现显示为白色就是看不到字体

- Android之应用市场排行榜、上架、首发

- p1457 The Castle

- thrift系列 - 快速入门