GBDT and Random Forest

2024-08-26 05:57:21

author:yangjing

time:2018-10-22

Gradient boosting decision tree

1. Main idea

The main idea behind GBDT is to combine many simple models (also known as weak learners), such as shallow trees. Each tree can only provide good predictions on part of the data, so more and more trees are added to iteratively improve performance.
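The idea can be sketched by hand for the simplest case, regression with squared loss, where each new shallow tree is fit to the residuals of the trees before it. This is only an illustration under assumptions: the helper names `fit_boosted_trees` and `predict_boosted` and the toy sine data are made up for this note, and scikit-learn's own implementation is more general.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosted_trees(X, y, n_trees=50, learning_rate=0.1, max_depth=2):
    """Fit shallow trees one after another, each on the current residuals."""
    base = float(np.mean(y))              # start from a constant prediction
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_trees):
        residual = y - pred               # what the ensemble still gets wrong
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)
        # Take only a small step towards the new tree's correction.
        pred += learning_rate * tree.predict(X)
        trees.append(tree)
    return base, trees


def predict_boosted(base, trees, X, learning_rate=0.1):
    """Sum the constant start value and the scaled corrections of all trees."""
    pred = np.full(X.shape[0], base)
    for tree in trees:
        pred += learning_rate * tree.predict(X)
    return pred


# Hypothetical toy data: a noisy sine curve.
rng = np.random.RandomState(0)
X_demo = rng.uniform(-3, 3, size=(200, 1))
y_demo = np.sin(X_demo[:, 0]) + rng.normal(scale=0.1, size=200)

base, trees = fit_boosted_trees(X_demo, y_demo)
mse_start = np.mean((y_demo - base) ** 2)
mse_end = np.mean((y_demo - predict_boosted(base, trees, X_demo)) ** 2)
print("training MSE with constant only:", mse_start)
print("training MSE after boosting:    ", mse_end)
```

Each tree on its own is a weak model, but the training error shrinks as their scaled corrections accumulate.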

2. Parameter settings

The algorithm is somewhat more sensitive to parameter settings than random forests, but it can provide better accuracy if the parameters are set correctly.

- Number of trees (n_estimators)

Increasing n_estimators also increases the model complexity, because the model has more chances to correct mistakes on the training set.

- Learning rate (learning_rate)

Controls how strongly each tree tries to correct the mistakes of the previous trees. A higher learning rate means each tree can make stronger corrections, allowing for more complex models.

- max_depth

Or alternatively max_leaf_nodes. Usually max_depth is set very low for gradient-boosted models, often not deeper than five splits.
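To get a feel for these parameters, one option is to fit the same classifier with a few different settings and compare training and test accuracy. The sketch below uses the breast cancer dataset from the code section of these notes. The particular values (`max_depth=1`, `learning_rate=0.01`) are arbitrary choices for illustration, not recommendations, and the exact scores depend on the scikit-learn version.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

cancer = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    cancer.data, cancer.target, random_state=0)

# Illustrative settings only: the defaults, shallower trees, a smaller step.
settings = {
    "defaults": {},
    "max_depth=1": {"max_depth": 1},
    "learning_rate=0.01": {"learning_rate": 0.01},
}

results = {}
for name, params in settings.items():
    model = GradientBoostingClassifier(random_state=0, **params)
    model.fit(X_train, y_train)
    results[name] = (model.score(X_train, y_train),
                     model.score(X_test, y_test))
    print(f"{name:20s} train={results[name][0]:.3f} test={results[name][1]:.3f}")
```

A large gap between training and test accuracy suggests the model is too complex, in which case lowering max_depth or learning_rate is a reasonable first thing to try.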

3. Code

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_breast_cancer

cancer=load_breast_cancer()

X_train,X_test,y_train,y_test=train_test_split(cancer.data,cancer.target,random_state=0)

gbrt=GradientBoostingClassifier(random_state=0)

gbrt.fit(X_train,y_train)

gbrt.score(X_test,y_test)

In [261]: X_train,X_test,y_train,y_test=train_test_split(cancer.data,cancer.target,random_state=0)

...: gbrt=GradientBoostingClassifier(random_state=0)

...: gbrt.fit(X_train,y_train)

...: gbrt.score(X_test,y_test)

...:

Out[261]: 0.958041958041958

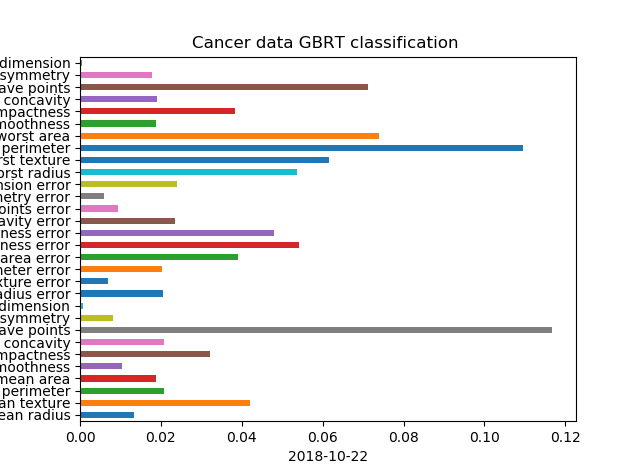

In [262]: gbrt.feature_importances_

Out[262]:

array([0.01337291, 0.04201687, 0.0208666 , 0.01889077, 0.01028091,

0.03215986, 0.02074619, 0.11678956, 0.00820024, 0.00074312,

0.02042134, 0.00680047, 0.02023052, 0.03907398, 0.05406751,

0.04795741, 0.02358101, 0.00934718, 0.00593481, 0.0239241 ,

0.05354265, 0.06160083, 0.10961728, 0.07395201, 0.01867851,

0.03842953, 0.01915824, 0.07128703, 0.01773659, 0.00059199])
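The raw array is hard to read without the feature names. A small sketch that pairs each importance with its name from `cancer.feature_names` and lists the five largest (the variable names `order` and `top5` are made up here, and the values may differ from the output above depending on the scikit-learn version):

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

cancer = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    cancer.data, cancer.target, random_state=0)
gbrt = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

importances = gbrt.feature_importances_
order = np.argsort(importances)[::-1]      # indices from most to least important
top5 = [(str(cancer.feature_names[i]), float(importances[i])) for i in order[:5]]

for name, value in top5:
    print(f"{name:25s} {value:.3f}")
```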

In [263]: gbrt.learning_rate

Out[263]: 0.1

In [264]: gbrt.max_depth

Out[264]: 3

In [265]: len(gbrt.estimators_)

Out[265]: 100

In [272]: gbrt.get_params()

Out[272]:

{'criterion': 'friedman_mse',

'init': None,

'learning_rate': 0.1,

'loss': 'deviance',

'max_depth': 3,

'max_features': None,

'max_leaf_nodes': None,

'min_impurity_decrease': 0.0,

'min_impurity_split': None,

'min_samples_leaf': 1,

'min_samples_split': 2,

'min_weight_fraction_leaf': 0.0,

'n_estimators': 100,

'presort': 'auto',

'random_state': 0,

'subsample': 1.0,

'verbose': 0,

'warm_start': False}
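Since n_estimators is 100 by default, it can be useful to check how test accuracy develops as trees are added. A sketch using `staged_predict`, which yields the predictions after each boosting stage (the names `staged_acc` and `best_n` are made up for this note):

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

cancer = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    cancer.data, cancer.target, random_state=0)
gbrt = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

# One accuracy value per stage: after 1 tree, 2 trees, ..., n_estimators trees.
staged_acc = [accuracy_score(y_test, pred)
              for pred in gbrt.staged_predict(X_test)]
best_n = int(np.argmax(staged_acc)) + 1

print("stages evaluated:", len(staged_acc))
print("first stage with the highest test accuracy:", best_n)
print("test accuracy with all trees:", staged_acc[-1])
```

Picking the number of trees from the test set like this is only for exploration; for model selection a separate validation set or cross-validation would be the safer choice.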

Random forest

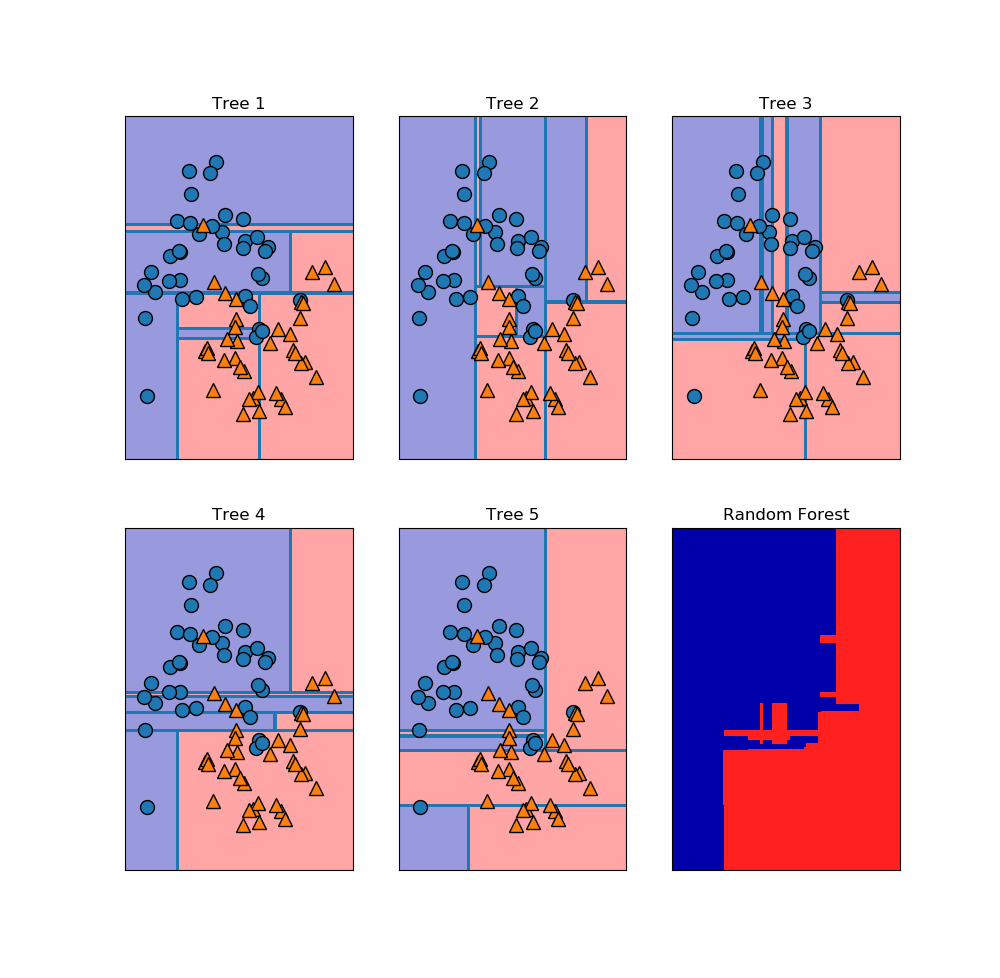

In [230]: y

Out[230]:

array([1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0,

0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0,

1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 1,

0, 0, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0,

0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0], dtype=int64)

In [231]: axes.ravel()

Out[231]:

array([<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46F3694A8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46C099F28>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46E6E3BE0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46BEB72E8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46ED67198>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001F46F292C88>],

dtype=object)

In [232]: from sklearn.model_selection import train_test_split

In [233]: X_trai,X_test,y_train,y_test=train_test_split(X,y,stratify=y,random_state=42)

In [234]: len(X_trai)

Out[234]: 75

In [235]: fores=RandomForestClassifier(n_estimators=5,random_state=2)

In [236]: fores.fit(X_trai,y_train)

Out[236]:

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=5, n_jobs=1,

oob_score=False, random_state=2, verbose=0, warm_start=False)

In [237]: fores.score(X_test,y_test)

Out[237]: 0.92

In [238]: fores.estimators_

Out[238]:

[DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=1872583848, splitter='best'),

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=794921487, splitter='best'),

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=111352301, splitter='best'),

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=1853453896, splitter='best'),

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=213298710, splitter='best')]
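The session above does not show where X and y come from. The sketch below assumes a two-moons toy dataset with 100 samples (which matches the 75 training rows, but is a guess) and shows how the forest combines the trees in `estimators_`: its predicted probabilities are the average of the per-tree probabilities.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Assumption: the original X, y are not shown; make_moons is a stand-in.
X, y = make_moons(n_samples=100, noise=0.25, random_state=3)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=42)

forest = RandomForestClassifier(n_estimators=5, random_state=2)
forest.fit(X_train, y_train)

# Each tree was trained on its own bootstrap sample and votes separately.
tree_probas = np.array([tree.predict_proba(X_test)
                        for tree in forest.estimators_])
mean_proba = tree_probas.mean(axis=0)      # average over the 5 trees

print("number of trees:", len(forest.estimators_))
print("averaging matches forest.predict_proba:",
      np.allclose(mean_proba, forest.predict_proba(X_test)))
print("test accuracy:", forest.score(X_test, y_test))
```

Unlike gradient boosting, the trees here are built independently of each other, so adding more of them mainly reduces variance instead of increasing model complexity.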
