DOCKER Study_004: Docker Networking

1 Introduction

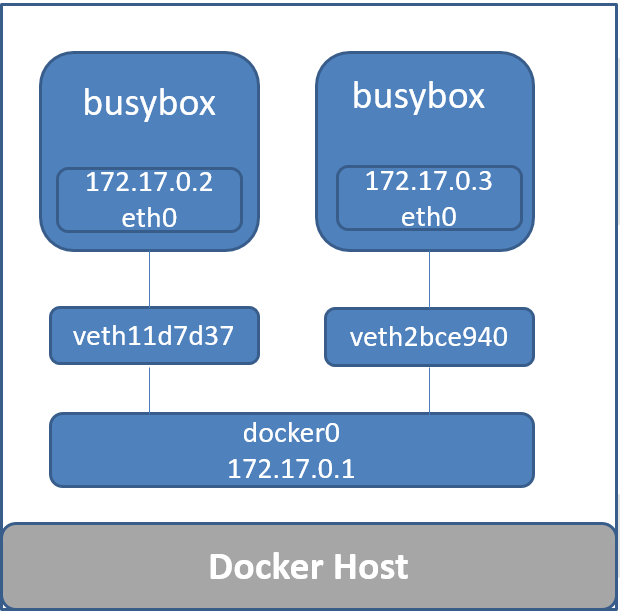

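When the Docker daemon starts, it creates a virtual bridge named docker0 on the host, and the containers started on that host are attached to it. A virtual bridge works like a physical switch, so all containers on the host are connected through it into a single layer-2 network. Docker assigns each container an IP address from the docker0 subnet and sets docker0's IP address as the container's default gateway. For each container, Docker creates a pair of virtual interfaces (a veth pair): one end is placed inside the new container and named eth0 (the container's NIC), and the other end stays on the host with a name like vethxxx and is added to the docker0 bridge. You can see this with the brctl show command. This network mode is called bridge mode.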

Besides bridge mode, Docker's built-in networking supports two other modes: none and host.

1.1 Viewing Docker's networks

[root@docker-server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

01dcd6c7d8fa bridge bridge local

6505e9ea7ccb host host local

7ab639028591 none null local

Remove all containers:

[root@docker-server1 data]# docker ps -qa |xargs docker rm -f

354fe8e46850

2fed9f37d75c

3b821b608a83

993ed9339059

9cd7c00cbfd7

e0ed338e565a

d5fe36b5a71a

b3dc7c8b56cd

a8ec13770dc0

e943751b7ce9

4ee7b1a64be5

a306908b2740

1.2 The host's network interfaces

[root@docker-server1 data]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether :0c:::dd: brd ff:ff:ff:ff:ff:ff

inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default   # the container bridge created by Docker

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

1.3 Creating a container and viewing its network interfaces

[root@docker-server1 ~]# docker run -it busybox /bin/sh

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

0f8c40e1270f: Pull complete

Digest: sha256:1303dbf110c57f3edf68d9f5a16c082ec06c4cf7604831669faf2c712260b5a0

Status: Downloaded newer image for busybox:latest

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

78: eth0@if79: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0   # the container's IP address

valid_lft forever preferred_lft forever

/ # ip route

default via 172.17.0.1 dev eth0

172.17.0.0/16 dev eth0 scope link src 172.17.0.2

1.4 Changes on the host

[root@docker-server1 ~]# ip addr

79: veth5e51ab1@if78: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ::f9:c9::8b brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80:::f9ff:fec9:798b/64 scope link

valid_lft forever preferred_lft forever

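The container's eth0 and the host's veth5e51ab1 are the two ends of one veth pair, and the host end is attached to the docker0 bridge (note "master docker0").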
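The `@ifN` suffixes show which interfaces form the pair: the container's `eth0@if79` says its peer has interface index 79 in the host namespace, and the host's `veth5e51ab1@if78` points back at index 78 inside the container. A minimal sketch for finding the host end of a container's veth pair, assuming the usual sysfs layout where every interface has a `/sys/class/net/<name>/ifindex` file; `peer_index` and `iface_by_index` are hypothetical helper names, not Docker commands:

```bash
#!/usr/bin/env bash
# Extract the peer index N from an "ip addr" header such as "78: eth0@if79: <...>".
peer_index() {
  local header=$1
  local rest=${header#*@if}            # text after the first "@if"
  [ "$rest" != "$header" ] || return 1 # no "@if" marker: not a veth-style name
  printf '%s\n' "${rest%%:*}"          # cut at the first ":" to keep only the number
}

# Print the interface whose ifindex equals $1.
# $2: optional sysfs root (defaults to /sys/class/net).
iface_by_index() {
  local want=$1 root=${2:-/sys/class/net} dir
  for dir in "$root"/*; do
    [ -r "$dir/ifindex" ] || continue
    if [ "$(cat "$dir/ifindex")" = "$want" ]; then
      printf '%s\n' "${dir##*/}"       # directory name is the interface name
      return 0
    fi
  done
  return 1
}

# Hypothetical usage on the host, with the header copied from inside the container:
# iface_by_index "$(peer_index '78: eth0@if79: <BROADCAST,MULTICAST,UP,LOWER_UP>')"
```

For the container above this would print `veth5e51ab1` on the host; the sysfs root is a parameter only so the function can be tried against a fake directory tree.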

2 Docker's bridge network

Network structure diagram:

2.1 Changing Docker's default network

[root@docker-server1 ~]# cat /etc/docker/daemon.json

{

"log-driver": "journald",

"bip":"192.168.0.1/24"

}

[root@docker-server1 ~]# systemctl restart docker

[root@docker-server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether :0c:::dd: brd ff:ff:ff:ff:ff:ff

inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

[root@docker-server1 ~]# docker run -it busybox /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

82: eth0@if83: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.2/24 brd 192.168.0.255 scope global eth0

valid_lft forever preferred_lft forever

Now look at the host again:

[root@docker-server1 ~]# ip addr

: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

83: veth6000535@if82: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether e6:4f:5f:61:f6:09 brd ff:ff:ff:ff:ff:ff link-netnsid

inet6 fe80::e44f:5fff:fe61:f609/64 scope link

valid_lft forever preferred_lft forever
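The bip value is the bridge's own address plus a prefix length, not a network address: 192.168.0.1/24 makes docker0 192.168.0.1 (the containers' default gateway), the subnet 192.168.0.0/24 with broadcast 192.168.0.255, and containers then receive addresses such as 192.168.0.2, as the output above shows. The arithmetic can be checked with a small pure-bash sketch; the function names are hypothetical and only IPv4 is handled:

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print gateway, subnet and broadcast for a bip-style value such as 192.168.0.1/24.
bip_summary() {
  local addr=${1%/*} prefix=${1#*/}
  local ip mask net bcast
  ip=$(ip_to_int "$addr")
  # Netmask with the top $prefix bits set, kept to 32 bits.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  net=$(( ip & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "gateway=$addr subnet=$(int_to_ip "$net")/$prefix broadcast=$(int_to_ip "$bcast")"
}

bip_summary 192.168.0.1/24
# gateway=192.168.0.1 subnet=192.168.0.0/24 broadcast=192.168.0.255
```

The same function applied to Docker's default 172.17.0.1/16 yields the 172.17.255.255 broadcast address seen in section 1.2.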

2.2 Verifying the bridge network

On another machine (docker-server2), whose bridge also uses the 192.168.0.0/24 subnet, start two containers:

[root@docker-server2 ~]# docker run -it centos /bin/bash

[root@2d28d8051991 /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid

inet 192.168.0.2/24 brd 192.168.0.255 scope global eth0

valid_lft forever preferred_lft forever

Start another container:

[root@docker-server2 ~]# docker run -it centos /bin/bash

[root@73a145861fe5 /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid

inet 192.168.0.3/24 brd 192.168.0.255 scope global eth0

valid_lft forever preferred_lft forever

Two containers on the same host can reach each other:

[root@2d28d8051991 /]# ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.218 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.054 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.064 ms

64 bytes from 192.168.0.3: icmp_seq=4 ttl=64 time=0.078 ms

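Pinging from a container on the other host (docker-server1) fails, because each host's bridge network is local to that host:

/ # ping 192.168.0.3   # no reply

PING 192.168.0.3 (192.168.0.3): 56 data bytes

Containers can reach external networks:

[root@docker-server1 ~]# docker exec -it c39a503abfae /bin/sh

/ # ping www.baidu.com

PING www.baidu.com (103.235.46.39): 56 data bytes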

bytes from 103.235.46.39: seq= ttl= time=1252.980 ms

bytes from 103.235.46.39: seq= ttl= time=249.676 ms

bytes from 103.235.46.39: seq= ttl= time=252.693 ms

bytes from 103.235.46.39: seq= ttl= time=306.417 ms

/ # cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 8.8.8.8

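The nameserver is the same as the host's: Docker builds the container's /etc/resolv.conf from the host's configuration.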

2.3 Viewing bridge network information

# The following command lists the bridge network's details; the "Containers" field shows which containers on this node use the network

[root@docker-server1 ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "4cac3a734539ba0178f382df11101c40477b9a6714c090a07bdb3278bd47524f",

"Created": "2019-11-09T10:19:59.805931858-05:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "192.168.0.1/24",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"c39a503abfaeb613551febf4a191d73cab26f381cfba2b972e074c93fc35c0a9": {

"Name": "busy_cannon",

"EndpointID": "21f2ff7ef65e69117d94a3d80dddde25ee334f12482a866d85e4cf56d7287dbb",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/24",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

2.4 Example: creating a container on the bridge network

[root@docker-server1 ~]# docker run -d --name web1 --net bridge nginx

42c2e52a339b76f44ba912888863810b4b7aaf379a8b5881ca0f4f4cee8622d0

[root@docker-server1 ~]# iptables -t nat -L

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER all -- anywhere anywhere ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

DOCKER all -- anywhere !loopback/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 192.168.0.0/24 anywhere

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- anywhere anywhere

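2.5 Containers on a bridge network accessing external networks

By default, containers on a bridge network can reach external networks, because Docker uses iptables SNAT (the MASQUERADE rule in the POSTROUTING chain above) to translate their outbound traffic. This requires IP forwarding to be enabled in the kernel (net.ipv4.ip_forward=1).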
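Outbound access depends on that kernel switch, so it is worth checking first. A minimal sketch: `ip_forward_enabled` is a hypothetical helper that reads the standard `/proc/sys/net/ipv4/ip_forward` file (the value `sysctl net.ipv4.ip_forward` reports); the path is a parameter only so it can be tested on a copy:

```bash
#!/usr/bin/env bash
# Return 0 if IPv4 forwarding is on, 1 otherwise.
# $1: optional path to the sysctl file (defaults to the real procfs entry).
ip_forward_enabled() {
  local file=${1:-/proc/sys/net/ipv4/ip_forward} value
  [ -r "$file" ] || return 1
  read -r value < "$file"
  [ "$value" = "1" ]
}

if ip_forward_enabled; then
  echo "net.ipv4.ip_forward=1: bridge containers can reach external networks via SNAT"
else
  echo "forwarding is off; it can be enabled with: sysctl -w net.ipv4.ip_forward=1"
fi
```

`sysctl -w` only changes the running kernel; to keep the setting after a reboot it is usually also added to `/etc/sysctl.conf`.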

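External networks accessing containers on a bridge network

For the outside world to reach a service provided by a container on a bridge network, you must tell Docker which ports to publish.

The ports an image declares can be viewed as follows: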

[root@docker-server1 ~]# docker inspect nginx | jq '.[].Config.ExposedPorts'

{

"80/tcp": {}

}

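When creating a container, you can specify how its ports map to host ports:

-p: specifies a host-to-container port mapping; the port to the left of the colon is the host port, and the one to the right is the container port

-P: publishes every port the image exposes, mapping each one to a random port on the host

This port mapping is implemented with iptables DNAT.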

View a container's port mappings:

[root@docker-server1 ~]# docker port 1f980072d2cf

/tcp -> 0.0.0.0:

If -p is given only a single port, the host picks a random port and maps it to that container port.

Below is an example of port mapping:

[root@docker-server1 ~]# docker run -d --dns 8.8.8.8 -p 8080:80 -p 2022:22 --name webserver1 httpd:2.4

d386c0b3218db97fe54baf6e03b7f2545aca2108318b87b851a10b8b49b04a6e

[root@docker-server1 ~]# docker run -d --dns 8.8.8.8 -P --name webserver2 httpd:2.4

565f610a0e6a1b09799ee5f85fd7ec8490d320560d212c7b2f488529d63801a5
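With -P the host ports are chosen by Docker, so they have to be looked up afterwards with `docker port`, whose lines have the form `80/tcp -> 0.0.0.0:<host port>`. A minimal sketch for extracting the host port of one container port; `host_port_from` is a hypothetical helper that reads `docker port` output on stdin, so it can be tested without a running daemon:

```bash
#!/usr/bin/env bash
# Print the first host port mapped to container port $1 (e.g. "80/tcp"),
# reading "docker port" style lines such as "80/tcp -> 0.0.0.0:32768" from stdin.
host_port_from() {
  local want=$1 spec arrow target
  while read -r spec arrow target; do
    [ "$arrow" = "->" ] || continue
    if [ "$spec" = "$want" ]; then
      # The host port is everything after the last ":" (also works for "[::]:32768").
      printf '%s\n' "${target##*:}"
      return 0
    fi
  done
  return 1
}

# Hypothetical usage against the webserver2 container started with -P:
# docker port webserver2 | host_port_from 80/tcp
```

Reading from stdin keeps the parsing separate from the Docker call, which is the part most likely to differ between environments.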

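3 The none network

As the name suggests, the none network has nothing in it: a container on it has no interface other than lo and is completely isolated. It suits applications that neither need to access external services nor should be reachable from outside.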

3.1 Viewing the none network

[root@docker-server1 ~]# docker network inspect none

[

{

"Name": "none",

"Id": "7ab6390285910ad89e0bbfaf857504d455609bd39eb68b39b59cea1b0b2a10b0",

"Created": "2019-11-09T02:36:44.439087426-05:00",

"Scope": "local",

"Driver": "null",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": []

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

3.2 Example: creating a container on the none network

[root@docker-server1 ~]# docker run -it --net none busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

/ # ping 127.0.0.1

PING 127.0.0.1 (127.0.0.1): 56 data bytes

64 bytes from 127.0.0.1: seq=0 ttl=64 time=0.052 ms

64 bytes from 127.0.0.1: seq=1 ttl=64 time=0.100 ms

/ # ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3): 56 data bytes

ping: sendto: Network is unreachable

/ # ping 192.168.0.1

PING 192.168.0.1 (192.168.0.1): 56 data bytes

ping: sendto: Network is unreachable

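4 The host network

A container on the host network shares the host's network stack and addresses, which gives the best forwarding performance. The drawback is that all such containers on one host share the same IP address, so if several of them use the same port, you have to resolve the port conflicts yourself.

As before, the network's details can be viewed as follows: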

4.1 Viewing the host network

[root@docker-server1 ~]# docker network inspect host

[

{

"Name": "host",

"Id": "6505e9ea7ccb39a251ec7ec80fe6198d874e24aff60bc036fde4ac9777aeca8c",

"Created": "2019-11-09T02:36:44.449893094-05:00",

"Scope": "local",

"Driver": "host",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": []

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

4.2 Example: creating a container on the host network

# The container has no IP address of its own, because it uses the host's addresses directly

[root@docker-server1 ~]# docker run -it --net host busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether :0c:::dd: brd ff:ff:ff:ff:ff:ff

inet 192.168.132.131/24 brd 192.168.132.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::bcf9:af19:a325:e2c7/64 scope link

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

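The container's network configuration is identical to the host's.

The drawback is that you cannot run two containers of the same application: once the first container occupies a port, the next one cannot bind to that port.

Take nginx as an example: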

[root@docker-server1 ~]# docker run -d --net host nginx

[root@docker-server1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d5ce7b93140d nginx "nginx -g 'daemon of…" seconds ago Up seconds charming_nightingale

Start a second one; it exits almost immediately because port 80 is already in use:

[root@docker-server1 ~]# docker run -d --net host nginx

[root@docker-server1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

30255c80abb9 nginx "nginx -g 'daemon of…" second ago Up second infallible_tu

[root@docker-server1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

30255c80abb9 nginx "nginx -g 'daemon of…" seconds ago Exited () Less than a second ago infallible_tu
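Because a host-network container binds directly on the host's interfaces, checking that the port is free before starting it avoids the silent exit seen above. A minimal sketch: `port_listening` is a hypothetical helper that scans the kernel's `/proc/net/tcp` table, where the local port is the hexadecimal number after the colon in the second column and state `0A` means LISTEN. It covers IPv4 sockets only (IPv6 ones are listed in `/proc/net/tcp6`):

```bash
#!/usr/bin/env bash
# Return 0 if some IPv4 socket is in LISTEN state on TCP port $1.
# $2: optional table path (defaults to /proc/net/tcp).
port_listening() {
  local port=$1 table=${2:-/proc/net/tcp}
  local want sl local_addr rem_addr state rest
  want=$(printf '%04X' "$port")   # the kernel prints ports as 4 uppercase hex digits
  [ -r "$table" ] || return 1
  while read -r sl local_addr rem_addr state rest; do
    # The header line has state "st"; skip everything that is not LISTEN (0A).
    [ "$state" = "0A" ] || continue
    if [ "${local_addr##*:}" = "$want" ]; then
      return 0
    fi
  done < "$table"
  return 1
}

if port_listening 80; then
  echo "port 80 is taken; another --net host nginx would fail to bind"
fi
```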

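5 Custom networks

Besides the three default network modes, Docker lets users create custom networks for specific scenarios. Containers on such a network are isolated as a group: they can communicate with each other, while containers outside the network cannot reach them directly. A container can belong to several networks, and containers on the same custom network can reach each other by container name, thanks to Docker's embedded DNS.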

Docker provides three custom network drivers:

- bridge

- overlay

- macvlan

Custom bridge networks

5.1 Creating a custom network

Create a custom network named my_net:

[root@docker-server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4cac3a734539 bridge bridge local

6505e9ea7ccb host host local

7ab639028591 none null local

[root@docker-server1 ~]# docker network create --driver bridge my_net   # --driver specifies the network driver (type)

b1c2d9c1e522f55187ca9662846c598c1e6973dd75bda756846db1a83a9e317d

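docker network ls now lists the new my_net network. Its Subnet (shown by docker network inspect my_net) is the network's IP range, and every container created on my_net gets an address from that range.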

[root@docker-server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4cac3a734539 bridge bridge local

6505e9ea7ccb host host local

b1c2d9c1e522 my_net bridge local

7ab639028591 none null local

The host also gets a new bridge interface:

[root@docker-server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether :0c:::dd: brd ff:ff:ff:ff:ff:ff

inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

: br-b1c2d9c1e522: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::cd:4a::4f brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global br-b1c2d9c1e522

valid_lft forever preferred_lft forever

Create one more network:

[root@docker-server1 ~]# docker network create --driver bridge my_net2

ec4a8380b2d343dd468c7247c80e8c1349f6219dec6c19e3c4e71751981611b6

[root@docker-server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether :0c:::dd: brd ff:ff:ff:ff:ff:ff

inet 192.168.132.131/24 brd 192.168.132.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bcf9:af19:a325:e2c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::3e:dd:: brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/24 brd 192.168.0.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80:::3eff:fedd:/ scope link

valid_lft forever preferred_lft forever

: br-b1c2d9c1e522: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::cd:4a::4f brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global br-b1c2d9c1e522

valid_lft forever preferred_lft forever

: br-ec4a8380b2d3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether ::c9:ba::ae brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-ec4a8380b2d3

valid_lft forever preferred_lft forever
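Note that my_net received 172.17.0.0/16: because bip moved docker0 to 192.168.0.0/24, the 172.17 range was free again, and my_net2 then took 172.18.0.0/16. The following is a deliberately simplified model of that behaviour, not Docker's actual IPAM code. It assumes candidates are tried in order from 172.17.0.0/16 to 172.31.0.0/16 and that one is skipped when it overlaps any subnet already in use; the function names are hypothetical:

```bash
#!/usr/bin/env bash
ip_to_int() { local a b c d; IFS=. read -r a b c d <<< "$1"; echo $(( (a << 24) | (b << 16) | (c << 8) | d )); }

# Return 0 if CIDRs $1 and $2 share at least one address.
cidr_overlap() {
  local n1 p1 n2 p2 p m
  n1=$(ip_to_int "${1%/*}"); p1=${1#*/}
  n2=$(ip_to_int "${2%/*}"); p2=${2#*/}
  p=$(( p1 < p2 ? p1 : p2 ))   # two prefixes overlap iff they agree under the shorter mask
  m=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  [ $(( n1 & m )) -eq $(( n2 & m )) ]
}

# Print the first 172.N.0.0/16 (N = 17..31) that overlaps none of the CIDRs given as arguments.
next_free_subnet() {
  local n cand used ok
  for n in {17..31}; do
    cand="172.$n.0.0/16"; ok=1
    for used in "$@"; do
      if cidr_overlap "$cand" "$used"; then ok=0; break; fi
    done
    if [ "$ok" -eq 1 ]; then echo "$cand"; return 0; fi
  done
  return 1
}

next_free_subnet 192.168.0.0/24                 # 172.17.0.0/16 (like my_net)
next_free_subnet 192.168.0.0/24 172.17.0.0/16   # 172.18.0.0/16 (like my_net2)
```

The real allocator also has other address pools and can be configured differently, so this only illustrates why the two results above came out the way they did.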

5.2 Creating containers on the my_net network:

[root@docker-server1 ~]# docker run -d --net my_net httpd:2.4

[root@docker-server1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

dc64cedce326 httpd:2.4 "httpd-foreground" seconds ago Up seconds 80/tcp optimistic_engelbart

[root@docker-server1 ~]# docker run -it --net my_net busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if103: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ip route

default via 172.17.0.1 dev eth0

172.17.0.0/16 dev eth0 scope link src 172.17.0.3

Creating a custom network with a specified subnet and gateway

5.3 Creating the my_net3 network with a specified subnet and gateway

[root@docker-server1 ~]# docker network create --driver bridge --subnet 172.22.16.0/24 --gateway 172.22.16.1 my_net3

f42e46889a2ab8930e2dbd828206ebe3c94d68efd0f1fe5ccf042674e6a08189

[root@docker-server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4cac3a734539 bridge bridge local

6505e9ea7ccb host host local

b1c2d9c1e522 my_net bridge local

ec4a8380b2d3 my_net2 bridge local

f42e46889a2a my_net3 bridge local

7ab639028591 none null local

5.4 Creating a container on the my_net3 network

[root@docker-server1 ~]# docker run -it --net my_net3 busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if106: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:16:10:02 brd ff:ff:ff:ff:ff:ff

inet 172.22.16.2/24 brd 172.22.16.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ip route

default via 172.22.16.1 dev eth0

172.22.16.0/24 dev eth0 scope link src 172.22.16.2

Create a container on my_net3 and assign its IP address at the same time:

[root@docker-server1 ~]# docker run -it --network=my_net3 --ip=172.22.16.8 busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if108: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:16:10:08 brd ff:ff:ff:ff:ff:ff

inet 172.22.16.8/24 brd 172.22.16.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ip route

default via 172.22.16.1 dev eth0

172.22.16.0/24 dev eth0 scope link src 172.22.16.8
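--ip is accepted only on a user-defined network whose subnet was specified explicitly (as my_net3 was), and the address has to lie inside that subnet. A small pure-bash sketch for checking an address before calling docker run; `ip_in_subnet` is a hypothetical helper and handles IPv4 only:

```bash
#!/usr/bin/env bash
ip_to_int() { local a b c d; IFS=. read -r a b c d <<< "$1"; echo $(( (a << 24) | (b << 16) | (c << 8) | d )); }

# Return 0 if IPv4 address $1 lies inside CIDR $2 (e.g. 172.22.16.8 in 172.22.16.0/24).
ip_in_subnet() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Inside the subnet means the network bits of both addresses match.
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

if ip_in_subnet 172.22.16.8 172.22.16.0/24; then
  echo "172.22.16.8 fits my_net3"
fi
```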

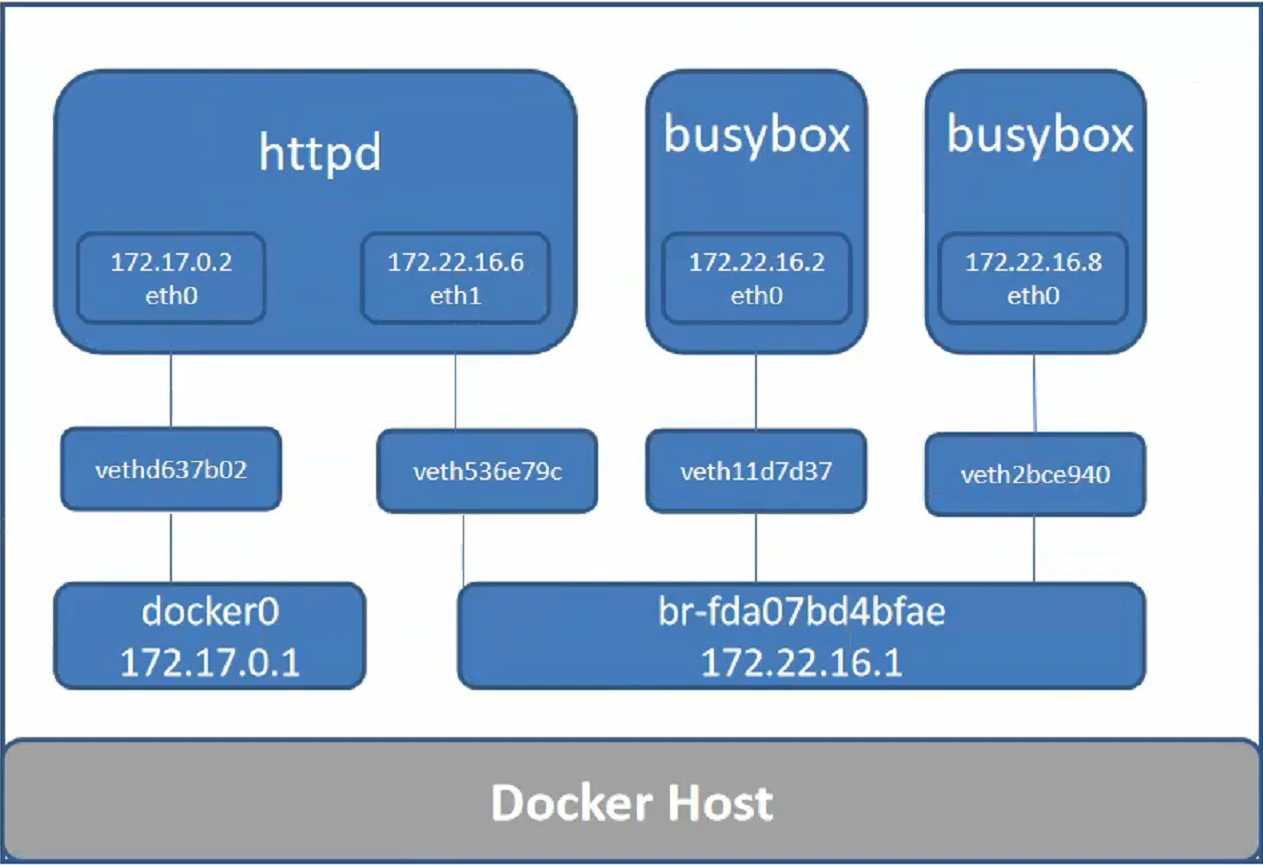

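5.5 Connecting a custom network with the default network

Remove all containers:

[root@docker-server1 ~]# docker ps -qa |xargs docker rm -f

Suppose the default bridge network also has a busybox container.

At this point, containers on the default network and containers on the my_net network cannot communicate with each other. The network layout on the host is as follows:

Create a container on the default network: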

[root@docker-server1 ~]# docker run -it busybox /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if112: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.2/24 brd 192.168.0.255 scope global eth0

valid_lft forever preferred_lft forever

Create containers on the custom network my_net:

[root@docker-server1 ~]# docker run -it --net my_net busybox /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if116: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@docker-server1 ~]# docker run -it --net my_net busybox /bin/sh

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if118: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.374 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.125 ms

^C

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.125/0.249/0.374 ms

/ # ping 192.168.0.2   # no reply

PING 192.168.0.2 (192.168.0.2): 56 data bytes

To let the busybox container on the default bridge communicate with the containers on my_net, add a my_net interface to it as follows.

View the containers:

[root@docker-server1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8423a78ce12d busybox "/bin/sh" minutes ago Up minutes xenodochial_pasteur

cbc45429caa8 busybox "/bin/sh" minutes ago Up minutes competent_poitras

623ae56a899c busybox "/bin/sh" minutes ago Up minutes exciting_visvesvaraya

This attaches an additional my_net interface to container 623ae56a899c (the busybox on the default bridge, 192.168.0.2):

[root@docker-server1 ~]# docker network connect my_net 623ae56a899c

Check the container's interfaces again:

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if112: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.2/24 brd 192.168.0.255 scope global eth0

valid_lft forever preferred_lft forever

: eth1@if120: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.4/16 brd 172.17.255.255 scope global eth1

valid_lft forever preferred_lft forever

/ # ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.195 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.073 ms

^C

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.073/0.134/0.195 ms

/ # ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.194 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.072 ms

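However, the other containers on my_net still cannot reach the default bridge network; only 623ae56a899c has interfaces on both.

Now connect a my_net container to the default bridge as well:

[root@docker-server1 ~]# docker network connect bridge cbc45429caa8

The second container (cbc45429caa8) can now reach both networks: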

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if116: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

: eth1@if122: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.3/24 brd 192.168.0.255 scope global eth1

valid_lft forever preferred_lft forever

/ # ping 192.168.0.2

PING 192.168.0.2 (192.168.0.2): 56 data bytes

64 bytes from 192.168.0.2: seq=0 ttl=64 time=0.288 ms

64 bytes from 192.168.0.2: seq=1 ttl=64 time=0.147 ms
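To confirm which networks a container is attached to after connect or disconnect, the one-line output of `ip -o -4 addr` is easier to scan than the full listing. A minimal sketch: `list_ipv4` is a hypothetical helper that turns each line into `interface address`, reading from stdin so it can be tested on saved output:

```bash
#!/usr/bin/env bash
# Read "ip -o -4 addr" lines, e.g.
#   "116: eth0    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0\ ..."
# and print "eth0 172.17.0.2/16" for each IPv4 address.
list_ipv4() {
  local idx ifname family addr rest
  while read -r idx ifname family addr rest; do
    [ "$family" = "inet" ] || continue
    printf '%s %s\n' "${ifname%%@*}" "$addr"   # drop any "@ifN" suffix
  done
}

# Hypothetical usage from the host:
# docker exec cbc45429caa8 ip -o -4 addr | list_ipv4
```

Each interface printed corresponds to one network the container is currently connected to (plus lo).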

5.6 Removing an interface

To remove the interface just added to container cbc45429caa8, use the following command:

[root@docker-server1 ~]# docker network disconnect bridge cbc45429caa8

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

: eth0@if116: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 192.168.0.2   # communication fails again

PING 192.168.0.2 (192.168.0.2): 56 data bytes

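Interconnecting containers on the same host

Containers on the same host can be interconnected in two ways. The first is by IP: by default, containers attached to the same network on a host can reach each other by IP address. The second is --link (Docker documents it as a legacy option; on user-defined networks, containers can already reach each other by name):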

docker run -d --name db1 -e "MYSQL_ROOT_PASSWORD=123456" -P mysql:5.6

docker run -d --link db1:db1 --name webserver1 httpd:2.4
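--link db1:db1 works by writing an entry for the alias db1, with db1's current IP, into webserver1's /etc/hosts (it also injects environment variables). A small sketch of how such an entry resolves: `hosts_lookup` is a hypothetical helper that prints the address of the first line listing the given name, and the file path is a parameter so it can be tried on a copy, for example one saved with `docker exec webserver1 cat /etc/hosts`:

```bash
#!/usr/bin/env bash
# Print the address of the first /etc/hosts-style entry that lists name $1.
# $2: optional hosts file (defaults to /etc/hosts).
hosts_lookup() {
  local name=$1 file=${2:-/etc/hosts} line alias
  local -a fields
  while IFS= read -r line; do
    line=${line%%#*}                    # drop comments
    read -ra fields <<< "$line"         # address followed by one or more names
    (( ${#fields[@]} >= 2 )) || continue
    for alias in "${fields[@]:1}"; do
      if [ "$alias" = "$name" ]; then
        printf '%s\n' "${fields[0]}"
        return 0
      fi
    done
  done < "$file"
  return 1
}
```

Because the entry is written when webserver1 starts, it is not refreshed if db1 later comes back with a different IP, which is one reason user-defined networks with name-based DNS are generally preferred.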

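Author's note: the content of this article comes mainly from teacher Yan Wei of Yutian Education (誉天教育), and all operations were verified by my own experiments. Readers who wish to repost it should contact Yutian Education (http://www.yutianedu.com/) for official permission, or obtain the consent of Mr. Yan himself (https://www.cnblogs.com/breezey/). Thanks!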
