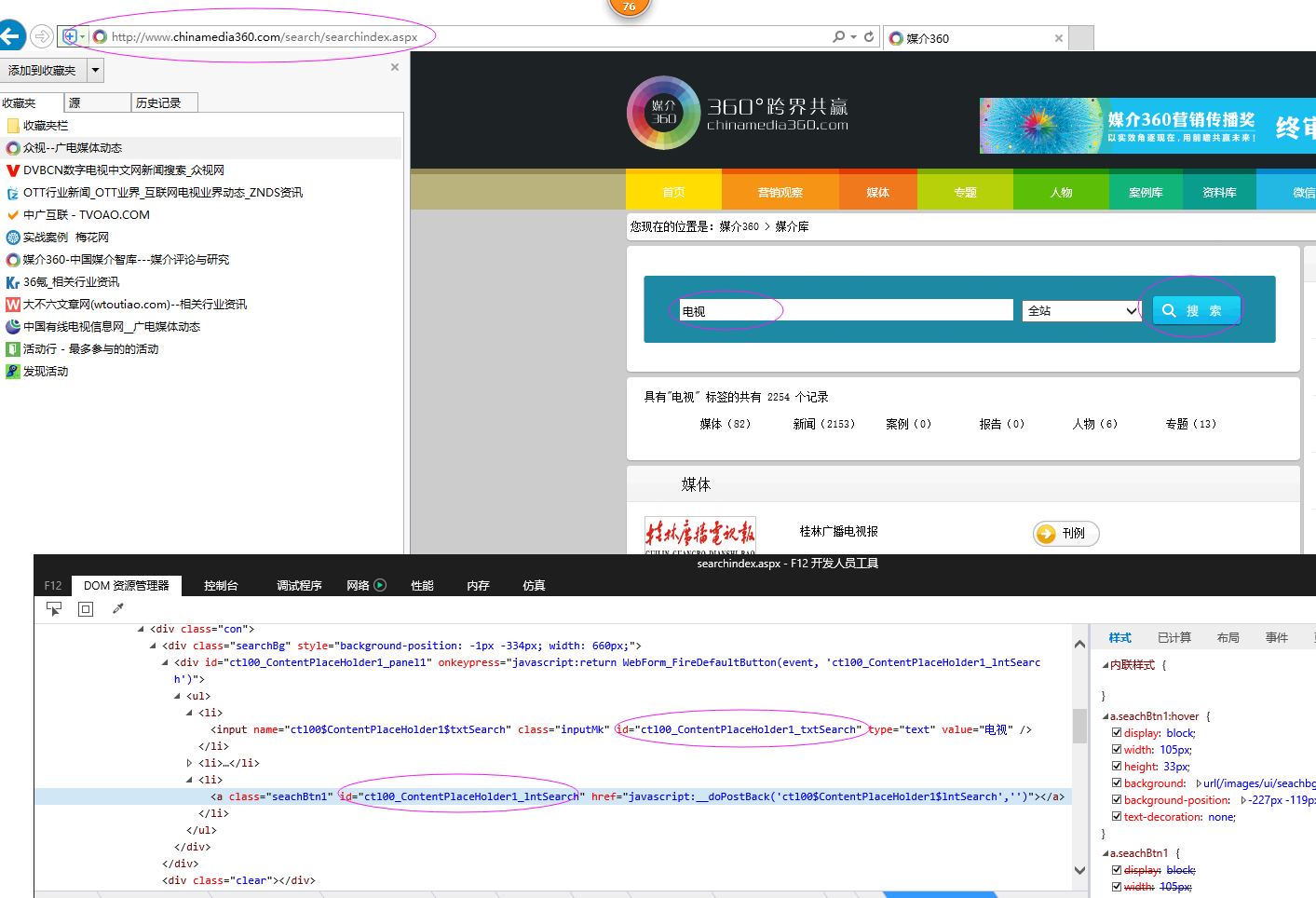

[Python Crawler] Part 14: Scraping 媒介360 Data with Selenium + PhantomJS

2024-08-28 19:36:26

The complete code is as follows:

# coding=utf-8
# Python 2 script: it relies on print statements, unicode() and the thread module.

import os
import re
import time
import urllib
import thread
from threading import Thread

from selenium import webdriver
import selenium.webdriver.support.ui as ui
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.action_chains import ActionChains
from selenium.webdriver.support.select import Select

# Project-local helper modules (not shown in this post)
import IniFile
import LogFile
import mongoDB

# Scraper thread class
class ScrapyData_Thread(Thread):

    def __init__(self, webSearchUrl, inputTXTIDLabel, searchlinkIDLabel,
                 htmlLable, htmltime, keyword, invalid_day, db):
        '''
        Constructor.
        :param webSearchUrl: URL of the search page
        :param inputTXTIDLabel: element ID of the search input box
        :param searchlinkIDLabel: element ID of the search link
        :param htmlLable: XPath of the result elements to search for
        :param htmltime: XPath of the publication-time elements
        :param keyword: keyword to search for (the caller splits the semicolon-separated keyword list)
        :param invalid_day: number of days a news item stays valid (by default, within 3 days)
        :param db: database engine used to save the results
        '''
        Thread.__init__(self)
        self.webSearchUrl = webSearchUrl
        self.inputTXTIDLabel = inputTXTIDLabel
        self.searchlinkIDLabel = searchlinkIDLabel
        self.htmlLable = htmlLable
        self.htmltime = htmltime
        self.keyword = keyword
        self.invalid_day = invalid_day
        self.db = db
        self.driver = webdriver.PhantomJS()
        self.wait = ui.WebDriverWait(self.driver, 20)
        self.driver.maximize_window()

    def Comapre_to_days(self, leftdate, rightdate):
        '''
        Compare two date strings and return how many days leftdate is later than rightdate.
        :param leftdate: format: 2017-04-15
        :param rightdate: format: 2017-04-15
        :return: number of days
        '''
        l_time = time.mktime(time.strptime(leftdate, '%Y-%m-%d'))
        r_time = time.mktime(time.strptime(rightdate, '%Y-%m-%d'))
        result = int(l_time - r_time) / 86400
        return result

    def run(self):
        print 'Keyword: %s' % self.keyword
        self.driver.get(self.webSearchUrl)
        time.sleep(1)

        # js = "var obj = document.getElementById('ctl00_ContentPlaceHolder1_txtSearch');obj.value='" + self.keyword + "';"
        # self.driver.execute_script(js)

        # Type the keyword into the search box, then click the search link
        ss_elements = self.driver.find_element_by_id(self.inputTXTIDLabel)
        ss_elements.send_keys(unicode(self.keyword, 'utf8'))
        search_elements = self.driver.find_element_by_id(self.searchlinkIDLabel)
        search_elements.click()
        time.sleep(4)

        # Wait (up to 20 seconds) until the result elements are present
        self.wait.until(lambda driver: self.driver.find_elements_by_xpath(self.htmlLable))
        Elements = self.driver.find_elements_by_xpath(self.htmlLable)
        timeElements = self.driver.find_elements_by_xpath(self.htmltime)
        urlList = []
        for hrefe in Elements:
            urlList.append(hrefe.get_attribute('href').encode('utf8'))

        index = 0
        strMessage = ' '
        strsplit = '\n------------------------------------------------------------------------------------\n'
        # Number of useful records on the page
        recordCount = 0
        usefulCount = 0
        meetingList = []
        kword = self.keyword
        currentDate = time.strftime('%Y-%m-%d')

        for element in Elements:
            strDate = timeElements[index].text.encode('utf8')
            # A record qualifies only if its date is within the validity window
            # (3 days) and the search keyword appears in its title.
            # Results are sorted by time, newest first, so once a record falls
            # outside the window all remaining records are older and the loop exits.
            if self.Comapre_to_days(currentDate, strDate) < self.invalid_day:
                usefulCount = 1
                txt = element.text.encode('utf8')
                if txt.find(kword) > -1:
                    dictM = {'title': txt, 'date': strDate,
                             'url': urlList[index], 'keyword': kword, 'info': txt}
                    meetingList.append(dictM)
                    # print ' '
                    # print 'Title: %s' % txt
                    # print 'Date: %s' % strDate
                    # print 'News link: ' + urlList[index]
                    # print strsplit
                    #
                    # log.WriteLog(strMessage)
                    recordCount = recordCount + 1
            else:
                usefulCount = 0

            # Stop at the first record that is outside the validity window
            if not usefulCount:
                break
            index = index + 1

        self.db.SaveMeetings(meetingList)
        print "Scraped %d matching news records in total" % recordCount
        self.driver.close()
        self.driver.quit()


if __name__ == '__main__':
    # Read the search settings from chinaMedia.conf in the working directory
    configfile = os.path.join(os.getcwd(), 'chinaMedia.conf')
    cf = IniFile.ConfigFile(configfile)
    webSearchUrl = cf.GetValue("section", "webSearchUrl")
    inputTXTIDLabel = cf.GetValue("section", "inputTXTIDLabel")
    searchlinkIDLabel = cf.GetValue("section", "searchlinkIDLabel")
    htmlLable = cf.GetValue("section", "htmlLable")
    htmltime = cf.GetValue("section", "htmltime")
    invalid_day = int(cf.GetValue("section", "invalid_day"))
    keywords = cf.GetValue("section", "keywords")
    keywordlist = keywords.split(';')

    start = time.clock()
    db = mongoDB.mongoDbBase()
    for keyword in keywordlist:
        if len(keyword) > 0:
            t = ScrapyData_Thread(webSearchUrl, inputTXTIDLabel, searchlinkIDLabel,
                                  htmlLable, htmltime, keyword, invalid_day, db)
            t.setDaemon(True)
            t.start()
            # join() right after start() means the keywords are processed one at a time
            t.join()
    end = time.clock()
    print "Total elapsed time: %f seconds" % (end - start)
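
The filtering step inside `run()` can be separated from the browser and tried on plain data. The following is a minimal Python 3 sketch, not the author's code: `days_between` and `filter_recent` are hypothetical names, it uses `datetime` in place of `time.mktime`, the sample records are made up, and it assumes the results arrive newest first, as the comments in the listing state.

```python
from datetime import date, datetime


def days_between(left, right):
    """Return how many days `left` is after `right` (both 'YYYY-MM-DD')."""
    fmt = '%Y-%m-%d'
    return (datetime.strptime(left, fmt) - datetime.strptime(right, fmt)).days


def filter_recent(records, keyword, max_age_days, today=None):
    """Keep records inside the validity window whose title contains `keyword`.

    `records` is a list of (title, date_string, url) tuples sorted newest
    first, so the scan stops at the first record outside the window.
    """
    if today is None:
        today = date.today().strftime('%Y-%m-%d')
    matches = []
    for title, day, url in records:
        if days_between(today, day) >= max_age_days:
            break  # every remaining record is older still
        if keyword in title:
            matches.append({'title': title, 'date': day, 'url': url,
                            'keyword': keyword, 'info': title})
    return matches


# Made-up sample data, for illustration only
sample = [
    ('big data summit', '2017-04-15', 'http://example.com/1'),
    ('unrelated news', '2017-04-14', 'http://example.com/2'),
    ('big data report', '2017-04-10', 'http://example.com/3'),
]
recent = filter_recent(sample, 'big data', 3, today='2017-04-15')
print(len(recent))
```

Passing `today` explicitly keeps the function deterministic, which makes the early-exit behaviour easy to check without depending on the current date.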

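The `__main__` block reads seven settings from `chinaMedia.conf` through the `IniFile` module, which this post does not show. As a rough sketch of what such a file might contain, the Python 3 snippet below parses an equivalent INI text with the standard `configparser` module. The section name and key names are taken from the listing; every value is a made-up placeholder, and `load_settings` is a hypothetical helper.

```python
import configparser

# Placeholder values only: the real URL, element IDs and XPaths
# are not given in the post.
SAMPLE_CONF = """
[section]
webSearchUrl = http://example.com/search
inputTXTIDLabel = search-input-id
searchlinkIDLabel = search-link-id
htmlLable = //div[@class='result']//a
htmltime = //div[@class='result']//span
invalid_day = 3
keywords = keyword one;keyword two;
"""


def load_settings(text):
    """Parse the INI text and return the settings the scraper needs."""
    parser = configparser.ConfigParser()
    # Keep the camelCase key names exactly as written in the file
    parser.optionxform = str
    parser.read_string(text)
    section = parser['section']
    settings = dict(section)
    settings['invalid_day'] = int(section['invalid_day'])
    # Drop empty entries, as the `len(keyword) > 0` check in the listing does
    settings['keywords'] = [k for k in section['keywords'].split(';') if k]
    return settings


settings = load_settings(SAMPLE_CONF)
print(settings['keywords'])
```

Keeping the selectors in a config file, as the listing does, means the same thread class can be pointed at a different search page without code changes.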