Caffe Convolution Layer Implementation

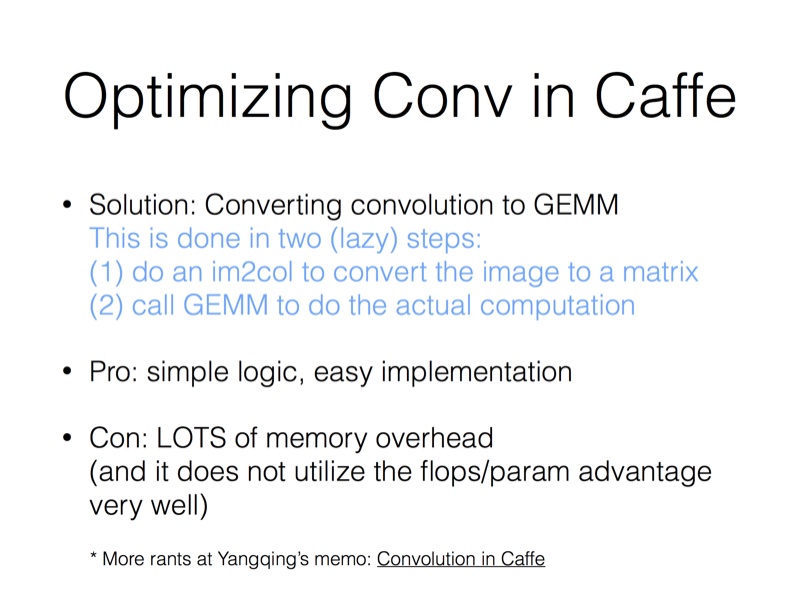

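The figure above is from Jia Yangqing's (jiayangqing) answer on Zhihu. In essence, the image is converted into a matrix, and the convolution is then carried out as a matrix operation.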

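The convolution is implemented in the conv_layer layer, which inherits from base_conv_layer. base_conv_layer is the base class for the convolution operations and covers both convolution and deconvolution. The forward pass of conv_layer is carried out by the forward_cpu_gemm function, which is declared in vision_layers.hpp and implemented in base_conv_layer.cpp. forward_cpu_gemm calls caffe_cpu_gemm and conv_im2col_cpu. caffe_cpu_gemm is implemented in util/math_functions.cpp; conv_im2col_cpu is a thin inline wrapper around im2col_cpu, which is implemented in util/im2col.cpp.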

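Below is the forward-pass code of conv_layer. It first calls forward_cpu_gemm to perform the multiplication part of the convolution, and then, if a bias term is used, calls forward_cpu_bias to perform the addition.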

    template <typename Dtype>
    void ConvolutionLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
          const vector<Blob<Dtype>*>& top) {
      // blobs_[0] holds the filter weights, blobs_[1] the bias
      const Dtype* weight = this->blobs_[0]->cpu_data();
      for (int i = 0; i < bottom.size(); ++i) {
        const Dtype* bottom_data = bottom[i]->cpu_data();
        Dtype* top_data = top[i]->mutable_cpu_data();
        // process the batch one sample at a time
        for (int n = 0; n < this->num_; ++n) {
          this->forward_cpu_gemm(bottom_data + bottom[i]->offset(n), weight,
              top_data + top[i]->offset(n));
          if (this->bias_term_) {
            const Dtype* bias = this->blobs_[1]->cpu_data();
            this->forward_cpu_bias(top_data + top[i]->offset(n), bias);
          }
        }
      }
    }

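num_ is defined in the BaseConvolutionLayer class in vision_layers.hpp and holds the batch size (explained in this blog post: https://blog.csdn.net/sinat_22336563/article/details/69808612). In other words, each convolution layer processes all the data in a batch before passing the result to the next layer. The network does not run one sample of the batch through every layer and then feed in the next sample. Here bottom[i]->offset(n) gives the position of the n-th image or feature map of the batch inside the blob's memory, so adding it to bottom_data moves the computation on to the next sample in the batch.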

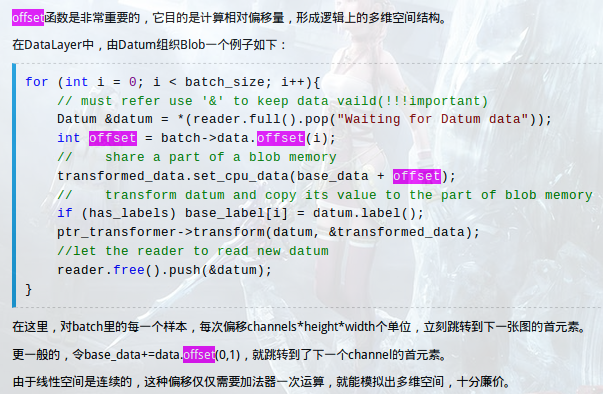
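To make the per-sample loop concrete, here is a minimal standalone sketch, not Caffe code. It assumes the batch is one contiguous N x C x H x W buffer, so sample n starts at n * C * H * W, which is the value offset(n) yields when c, h and w are left at 0. The names sample_offset and sum_per_sample are hypothetical, and a plain sum stands in for the GEMM.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: start of sample n in a contiguous N x C x H x W buffer.
inline std::size_t sample_offset(std::size_t n, std::size_t channels,
                                 std::size_t height, std::size_t width) {
  return n * channels * height * width;
}

// Walks the batch sample by sample, the way Forward_cpu does, with a
// trivial per-sample operation (a sum) standing in for the convolution.
std::vector<float> sum_per_sample(const std::vector<float>& batch,
                                  std::size_t num, std::size_t channels,
                                  std::size_t height, std::size_t width) {
  const std::size_t sample_size = channels * height * width;
  assert(batch.size() == num * sample_size);
  std::vector<float> sums(num, 0.0f);
  for (std::size_t n = 0; n < num; ++n) {
    // pointer to the first element of sample n
    const float* sample =
        batch.data() + sample_offset(n, channels, height, width);
    for (std::size_t i = 0; i < sample_size; ++i) {
      sums[n] += sample[i];
    }
  }
  return sums;
}
```

Each iteration only sees the slice belonging to its own sample, which is why the layer can finish the whole batch before handing the result to the next layer.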

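offset is defined in blob.hpp. Each bottom[i] and top[i] is a pointer to a Blob, so it can use any member of the Blob class. To see how a particular call works, read the implementation of that class directly.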

    inline int offset(const int n, const int c = 0, const int h = 0,
        const int w = 0) const {
      CHECK_GE(n, 0);
      CHECK_LE(n, num());
      CHECK_GE(channels(), 0);
      CHECK_LE(c, channels());
      CHECK_GE(height(), 0);
      CHECK_LE(h, height());
      CHECK_GE(width(), 0);
      CHECK_LE(w, width());
      // flat index of element (n, c, h, w) in NCHW layout
      return ((n * channels() + c) * height() + h) * width() + w;
    }

    inline int offset(const vector<int>& indices) const {
      CHECK_LE(indices.size(), num_axes());
      int offset = 0;
      for (int i = 0; i < num_axes(); ++i) {
        offset *= shape(i);
        // axes without an explicit index contribute 0
        if (indices.size() > i) {
          CHECK_GE(indices[i], 0);
          CHECK_LT(indices[i], shape(i));
          offset += indices[i];
        }
      }
      return offset;
    }
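The vector-index version of offset can be tried outside Caffe. The following is a standalone sketch under the assumption that the shape is passed in explicitly; flat_offset is a hypothetical name and plain assert replaces the CHECK_* macros.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical standalone version of the vector-index offset computation.
// `shape` holds the extent of each axis. `indices` may be shorter than
// `shape`; missing trailing indices are treated as 0.
int flat_offset(const std::vector<int>& shape,
                const std::vector<int>& indices) {
  assert(indices.size() <= shape.size());
  int offset = 0;
  for (std::size_t i = 0; i < shape.size(); ++i) {
    offset *= shape[i];  // Horner-style accumulation over the axes
    if (i < indices.size()) {
      assert(indices[i] >= 0 && indices[i] < shape[i]);
      offset += indices[i];
    }
  }
  return offset;
}
```

For a shape of (2, 3, 4, 5), the index (1, 2, 3, 4) maps to ((1 * 3 + 2) * 4 + 3) * 5 + 4 = 119, and the single index (1) maps to 60, the start of the second sample.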

https://www.cnblogs.com/neopenx/p/5294682.html

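Below are the implementations of forward_cpu_gemm and forward_cpu_bias. is_1x1_ indicates whether this is a 1x1 convolution, in which case the input is used directly as the column buffer. skip_im2col indicates whether the conversion of the image into a matrix should be skipped.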

    template <typename Dtype>
    void BaseConvolutionLayer<Dtype>::forward_cpu_gemm(const Dtype* input,
        const Dtype* weights, Dtype* output, bool skip_im2col) {
      const Dtype* col_buff = input;
      if (!is_1x1_) {
        if (!skip_im2col) {
          conv_im2col_cpu(input, col_buffer_.mutable_cpu_data());
        }
        col_buff = col_buffer_.cpu_data();
      }
      // one GEMM per group: output = weights * col_buff
      for (int g = 0; g < group_; ++g) {
        caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, conv_out_channels_ /
            group_, conv_out_spatial_dim_, kernel_dim_ / group_,
            (Dtype)1., weights + weight_offset_ * g, col_buff + col_offset_ * g,
            (Dtype)0., output + output_offset_ * g);
      }
    }

    template <typename Dtype>
    void BaseConvolutionLayer<Dtype>::forward_cpu_bias(Dtype* output,
        const Dtype* bias) {
      // output += bias * bias_multiplier_ (beta = 1 accumulates onto output)
      caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num_output_,
          height_out_ * width_out_, 1, (Dtype)1., bias, bias_multiplier_.cpu_data(),
          (Dtype)1., output);
    }
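forward_cpu_bias expresses the bias addition as a matrix product: the bias is treated as a num_output_ x 1 column, multiplied by a 1 x (height_out_ * width_out_) row, and accumulated onto the output because beta is 1. The sketch below spells that out with plain loops. It assumes bias_multiplier_ holds all ones, which the code shown here does not confirm, and add_bias_as_outer_product is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: output (num_output x spatial, row-major) += bias * ones^T.
// Equivalent to a GEMM with M = num_output, N = spatial, K = 1,
// alpha = 1 and beta = 1.
void add_bias_as_outer_product(std::vector<float>& output,
                               const std::vector<float>& bias,
                               std::size_t spatial) {
  assert(output.size() == bias.size() * spatial);
  // assumed contents of bias_multiplier_: a row of ones
  const std::vector<float> ones(spatial, 1.0f);
  for (std::size_t c = 0; c < bias.size(); ++c) {
    for (std::size_t s = 0; s < spatial; ++s) {
      output[c * spatial + s] += bias[c] * ones[s];
    }
  }
}
```

Every spatial position of output channel c receives the same value bias[c], which is what a per-channel bias means.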

conv_im2col_cpu and the im2col_cpu function it wraps:

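    inline void conv_im2col_cpu(const Dtype* data, Dtype* col_buff) {
      // the literal argument that follows `data` is elided in the source
      im2col_cpu(data, /* ... */, conv_in_channels_, conv_in_height_, conv_in_width_,
          kernel_h_, kernel_w_, pad_h_, pad_w_, stride_h_, stride_w_,
          1, 1, col_buff);  // dilation_h = dilation_w = 1, i.e. no dilation
    }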

    template <typename Dtype>
    void im2col_cpu(const Dtype* data_im, const int channels,
        const int height, const int width, const int kernel_h, const int kernel_w,
        const int pad_h, const int pad_w,
        const int stride_h, const int stride_w,
        const int dilation_h, const int dilation_w,
        Dtype* data_col) {
      const int output_h = (height + 2 * pad_h -
        (dilation_h * (kernel_h - 1) + 1)) / stride_h + 1;
      const int output_w = (width + 2 * pad_w -
        (dilation_w * (kernel_w - 1) + 1)) / stride_w + 1;
      const int channel_size = height * width;
      // one row of data_col per (channel, kernel_row, kernel_col) triple
      for (int channel = channels; channel--; data_im += channel_size) {
        for (int kernel_row = 0; kernel_row < kernel_h; kernel_row++) {
          for (int kernel_col = 0; kernel_col < kernel_w; kernel_col++) {
            int input_row = -pad_h + kernel_row * dilation_h;
            for (int output_rows = output_h; output_rows; output_rows--) {
              if (!is_a_ge_zero_and_a_lt_b(input_row, height)) {
                // whole row lies in the padding: fill with zeros
                for (int output_cols = output_w; output_cols; output_cols--) {
                  *(data_col++) = 0;
                }
              } else {
                int input_col = -pad_w + kernel_col * dilation_w;
                for (int output_col = output_w; output_col; output_col--) {
                  if (is_a_ge_zero_and_a_lt_b(input_col, width)) {
                    *(data_col++) = data_im[input_row * width + input_col];
                  } else {
                    *(data_col++) = 0;
                  }
                  input_col += stride_w;
                }
              }
              input_row += stride_h;
            }
          }
        }
      }
    }
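A small worked example may help show what im2col produces and why one matrix product then yields the convolution. The sketch below is a simplified, index-based variant written for readability, not the Caffe routine: dilation is fixed at 1, a single pad and stride apply to both directions, and the names im2col_simple and apply_filter are hypothetical. The row order (channel, kernel row, kernel column) and column order (output row, output column) follow the loop nesting of im2col_cpu above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified im2col sketch (dilation fixed at 1). The result is a row-major
// matrix with channels*kh*kw rows and out_h*out_w columns.
std::vector<float> im2col_simple(const std::vector<float>& im, int channels,
                                 int height, int width, int kh, int kw,
                                 int pad, int stride) {
  assert(im.size() == static_cast<std::size_t>(channels) * height * width);
  const int out_h = (height + 2 * pad - kh) / stride + 1;
  const int out_w = (width + 2 * pad - kw) / stride + 1;
  std::vector<float> col;
  col.reserve(static_cast<std::size_t>(channels) * kh * kw * out_h * out_w);
  for (int c = 0; c < channels; ++c) {
    for (int kr = 0; kr < kh; ++kr) {
      for (int kc = 0; kc < kw; ++kc) {
        for (int oy = 0; oy < out_h; ++oy) {
          for (int ox = 0; ox < out_w; ++ox) {
            const int y = oy * stride - pad + kr;
            const int x = ox * stride - pad + kc;
            const bool inside = y >= 0 && y < height && x >= 0 && x < width;
            // positions that fall into the padding contribute 0
            col.push_back(inside ? im[(c * height + y) * width + x] : 0.0f);
          }
        }
      }
    }
  }
  return col;
}

// One filter, flattened to a row of length channels*kh*kw, times the
// column matrix. Returns one output value per output position.
std::vector<float> apply_filter(const std::vector<float>& filter,
                                const std::vector<float>& col) {
  assert(!filter.empty() && col.size() % filter.size() == 0);
  const std::size_t cols = col.size() / filter.size();
  std::vector<float> out(cols, 0.0f);
  for (std::size_t k = 0; k < filter.size(); ++k) {
    for (std::size_t j = 0; j < cols; ++j) {
      out[j] += filter[k] * col[k * cols + j];
    }
  }
  return out;
}
```

For the 3x3 image 1..9 with a 2x2 kernel, no padding and stride 1, the column matrix has the rows (1,2,4,5), (2,3,5,6), (4,5,7,8) and (5,6,8,9). Multiplying by the flattened filter (1,0,0,1) gives (6,8,12,14), the same values obtained by sliding the kernel over the image directly.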

Below is the implementation of caffe_cpu_gemm. cblas_sgemm is a function from the CBLAS library (#include <cblas.h>).

    template<>
    void caffe_cpu_gemm<float>(const CBLAS_TRANSPOSE TransA,
        const CBLAS_TRANSPOSE TransB, const int M, const int N, const int K,
        const float alpha, const float* A, const float* B, const float beta,
        float* C) {
      int lda = (TransA == CblasNoTrans) ? K : M;
      int ldb = (TransB == CblasNoTrans) ? N : K;
      cblas_sgemm(CblasRowMajor, TransA, TransB, M, N, K, alpha, A, lda, B,
          ldb, beta, C, N);
    }

    template<>
    void caffe_cpu_gemm<double>(const CBLAS_TRANSPOSE TransA,
        const CBLAS_TRANSPOSE TransB, const int M, const int N, const int K,
        const double alpha, const double* A, const double* B, const double beta,
        double* C) {
      int lda = (TransA == CblasNoTrans) ? K : M;
      int ldb = (TransB == CblasNoTrans) ? N : K;
      cblas_dgemm(CblasRowMajor, TransA, TransB, M, N, K, alpha, A, lda, B,
          ldb, beta, C, N);
    }

    template<>
    void caffe_cpu_gemm<Half>(const CBLAS_TRANSPOSE TransA,
        const CBLAS_TRANSPOSE TransB, const int M, const int N, const int K,
        const Half alpha, const Half* A, const Half* B, const Half beta,
        Half* C) {
      DeviceMemPool& pool = Caffe::Get().cpu_mem_pool();
      float* fA = (float*)pool.Allocate(M*K*sizeof(float));
      float* fB = (float*)pool.Allocate(K*N*sizeof(float));
      float* fC = (float*)pool.Allocate(M*N*sizeof(float));
      halves2floats_cpu(A, fA, M*K);
      halves2floats_cpu(B, fB, K*N);
      halves2floats_cpu(C, fC, M*N);
      caffe_cpu_gemm<float>(TransA, TransB, M, N, K, float(alpha), fA, fB, float(beta), fC);
      floats2halves_cpu(fC, C, M*N);
      pool.Free((char*)fA);
      pool.Free((char*)fB);
      pool.Free((char*)fC);
    }
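To see what the GEMM call computes and where lda and ldb come from, a naive reference can stand in for cblas_sgemm. This is only a sketch for the row-major, no-transpose case; gemm_row_major is a hypothetical name and the triple loop makes no attempt at BLAS-level performance.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive reference for C = alpha * A * B + beta * C with row-major storage
// and no transposition. A is M x K, B is K x N, C is M x N.
void gemm_row_major(int M, int N, int K, float alpha,
                    const std::vector<float>& A, const std::vector<float>& B,
                    float beta, std::vector<float>& C) {
  assert(A.size() == static_cast<std::size_t>(M) * K);
  assert(B.size() == static_cast<std::size_t>(K) * N);
  assert(C.size() == static_cast<std::size_t>(M) * N);
  // In row-major storage the leading dimension is the row length.
  const int lda = K, ldb = N, ldc = N;
  for (int i = 0; i < M; ++i) {
    for (int j = 0; j < N; ++j) {
      float acc = 0.0f;
      for (int k = 0; k < K; ++k) {
        acc += A[i * lda + k] * B[k * ldb + j];
      }
      C[i * ldc + j] = alpha * acc + beta * C[i * ldc + j];
    }
  }
}
```

In forward_cpu_gemm, M is conv_out_channels_ / group_, N is conv_out_spatial_dim_ and K is kernel_dim_ / group_, with alpha = 1 and beta = 0, so the output is overwritten. forward_cpu_bias uses K = 1 and beta = 1, so the bias is added on top of the existing output.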
