A First Look at CrawlSpider in Python

2024-09-05 06:31:28

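Notes:

"""

1. You can generate a CrawlSpider template with the command: scrapy genspider -t crawl <spider_name> <allowed_domain>. You can also create the file by hand.

2. A CrawlSpider must not define a data-extraction method named parse. CrawlSpider uses that method itself to implement basic functionality such as URL extraction.

3. A Rule object accepts many parameters. The first is a LinkExtractor object that holds the URL pattern. Other commonly used parameters are callback (a string naming the method that parses responses for matching URLs) and follow (whether links extracted from the response should be followed).

4. When no callback is specified and follow is True, URLs matching the rule are still requested, and their responses are run through the rules again.

5. If several Rules match the same URL, the first matching Rule in rules is the one that handles it.

"""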
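Notes 3 to 5 can be illustrated with a small standard-library model. This is only a sketch of the documented behaviour, not Scrapy's implementation: SimpleRule and first_matching_rule are hypothetical names, the third catch-all rule and the info123.htm URL are made up for the example, and the first two patterns are the ones used by the spider later in this post.

```python
import re
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class SimpleRule:
    """Toy stand-in for scrapy.spiders.Rule (hypothetical, for illustration only)."""
    allow: str                      # regex searched for inside the URL
    callback: Optional[str] = None  # name of the parsing method, if any
    follow: Optional[bool] = None   # None means "use the default"

    def should_follow(self) -> bool:
        # Documented default: follow is True when no callback is given,
        # and False when a callback is given.
        if self.follow is not None:
            return self.follow
        return self.callback is None


def first_matching_rule(url: str, rules: Sequence[SimpleRule]) -> Optional[SimpleRule]:
    """Return the first rule whose pattern is found in the URL, or None."""
    for rule in rules:
        if re.search(rule.allow, url):
            return rule
    return None


RULES = (
    SimpleRule(allow=r"/web/site0/tab5240/info\d+\.htm", callback="parse_item"),
    SimpleRule(allow=r"/web/site0/tab5240/module14430/page\d+\.htm", follow=True),
    SimpleRule(allow=r"/web/site0/", callback="parse_other"),  # hypothetical catch-all
)

# Both URLs also match the catch-all, but an earlier rule claims them first.
detail = first_matching_rule(
    "http://bxjg.circ.gov.cn/web/site0/tab5240/info123.htm", RULES)
listing = first_matching_rule(
    "http://bxjg.circ.gov.cn/web/site0/tab5240/module14430/page2.htm", RULES)

print(detail.callback, detail.should_follow())    # parse_item False
print(listing.callback, listing.should_follow())  # None True
```

The order of rules matters: if the catch-all were listed first, it would take every link and the two specific rules would never fire.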

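1. Create the project

scrapy startproject zjh

2. Create the spider

scrapy genspider -t crawl circ bxjg.circ.gov.cn

Compared with generating a basic spider, the only difference is the added -t crawl argument.

3. In the settings file, set the log level and USER_AGENT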

# -*- coding: utf-8 -*-

# Scrapy settings for zjh project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
# https://doc.scrapy.org/en/latest/topics/settings.html
# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'zjh'

SPIDER_MODULES = ['zjh.spiders']
NEWSPIDER_MODULE = 'zjh.spiders'

LOG_LEVEL = "WARNING"

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'zjh (+http://www.yourdomain.com)'
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
# 'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
# 'zjh.middlewares.ZjhSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
# 'zjh.middlewares.ZjhDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
# 'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
#ITEM_PIPELINES = {
# 'zjh.pipelines.ZjhPipeline': 300,
#}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
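Scrapy logs through Python's standard logging module, so LOG_LEVEL = "WARNING" acts as a severity threshold. The sketch below shows that threshold using only the standard library. It does not reproduce how Scrapy wires up its own handlers; ListHandler, demo_log_level, the logger name, and the messages are all made up for illustration.

```python
import logging


class ListHandler(logging.Handler):
    """Collects log messages in a list so they can be inspected afterwards."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(f"{record.levelname}: {record.getMessage()}")


def demo_log_level(level_name: str) -> list:
    """Log one message per severity and return those that pass the threshold."""
    logger = logging.getLogger(f"demo.{level_name}")  # hypothetical logger name
    logger.setLevel(getattr(logging, level_name))
    logger.propagate = False  # keep the demo output out of the root logger
    handler = ListHandler()
    logger.addHandler(handler)
    logger.debug("crawled a page")
    logger.info("spider opened")
    logger.warning("something looks wrong")
    logger.error("request failed")
    logger.removeHandler(handler)
    return handler.messages


kept = demo_log_level("WARNING")
print(kept)  # ['WARNING: something looks wrong', 'ERROR: request failed']
```

With the threshold at WARNING, the routine DEBUG and INFO chatter of a crawl is dropped, which is why print(item) output in the spider is easier to spot.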

4. Extract the data in circ.py

# -*- coding: utf-8 -*-
import re

import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule


class CircSpider(CrawlSpider):
    name = 'circ'
    allowed_domains = ['bxjg.circ.gov.cn']
    start_urls = ['http://bxjg.circ.gov.cn/web/site0/tab5240/module14430/page1.htm']

    # Rules for extracting URLs.
    # Each Rule is one rule; LinkExtractor is the link extractor that pulls out URLs.
    # allow: regex for the URLs to extract. The URLs found on the page may be
    #        incomplete; CrawlSpider completes them before sending the request.
    # callback: the response for each extracted URL is handed to this method.
    # follow: whether the response for an extracted URL is run through rules
    #         again to extract more URLs.
    rules = (
        # Detail pages: parse the data, no need to follow.
        Rule(LinkExtractor(allow=r'/web/site0/tab5240/info\d+\.htm'), callback='parse_item'),
        # Next page: no callback needed, but follow=True keeps paging through the list.
        Rule(LinkExtractor(allow=r'/web/site0/tab5240/module14430/page\d+\.htm'), follow=True),
    )

    # parse() has a special role in CrawlSpider, so do not define it here.
    def parse_item(self, response):
        html = response.body.decode()
        item = {}
        item["title"] = re.findall(r"<!--TitleStart-->(.*?)<!--TitleEnd-->", html)[0]
        item["publish_date"] = re.findall(r"发布时间:20\d{2}-\d{2}-\d{2}", html)[0]
        print(item)
        # You can also build a follow-up request yourself with scrapy.Request():
        # yield scrapy.Request(
        #     url,
        #     callback=self.parse_detail,
        #     meta={"item": item},
        # )

    def parse_detail(self, response):
        pass
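One thing to watch in parse_item: indexing the result of re.findall with [0] raises IndexError on any page that lacks the marker. A more forgiving variant could look like the sketch below. It is an assumption-laden illustration, not the author's code: first_group and extract_item are hypothetical helpers, SAMPLE_HTML is invented, the date pattern captures only the date rather than the whole "发布时间:..." string, and it accepts both an ASCII and a full-width colon because the real page markup is not shown here.

```python
import re
from typing import Optional

# Same title markers as in the spider; re.S lets the title span several lines.
TITLE_RE = re.compile(r"<!--TitleStart-->(.*?)<!--TitleEnd-->", re.S)
# Assumption: accept either colon variant and capture only the date itself.
DATE_RE = re.compile(r"发布时间[::]\s*(20\d{2}-\d{2}-\d{2})")


def first_group(pattern: "re.Pattern", text: str) -> Optional[str]:
    """Return the first captured group, stripped, or None when nothing matches."""
    match = pattern.search(text)
    return match.group(1).strip() if match else None


def extract_item(html: str) -> dict:
    """Build the same two-field item as parse_item, without raising on a miss."""
    return {
        "title": first_group(TITLE_RE, html),
        "publish_date": first_group(DATE_RE, html),
    }


# Invented markup that mimics the markers the spider looks for.
SAMPLE_HTML = (
    "<td><!--TitleStart--> Sample notice title <!--TitleEnd--></td>"
    "<span>发布时间:2019-05-20</span>"
)

print(extract_item(SAMPLE_HTML))
print(extract_item("<html></html>"))
```

Inside the spider, extract_item(response.text) could replace the two findall lines, and items with a None field could be skipped or logged instead of crashing the callback.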

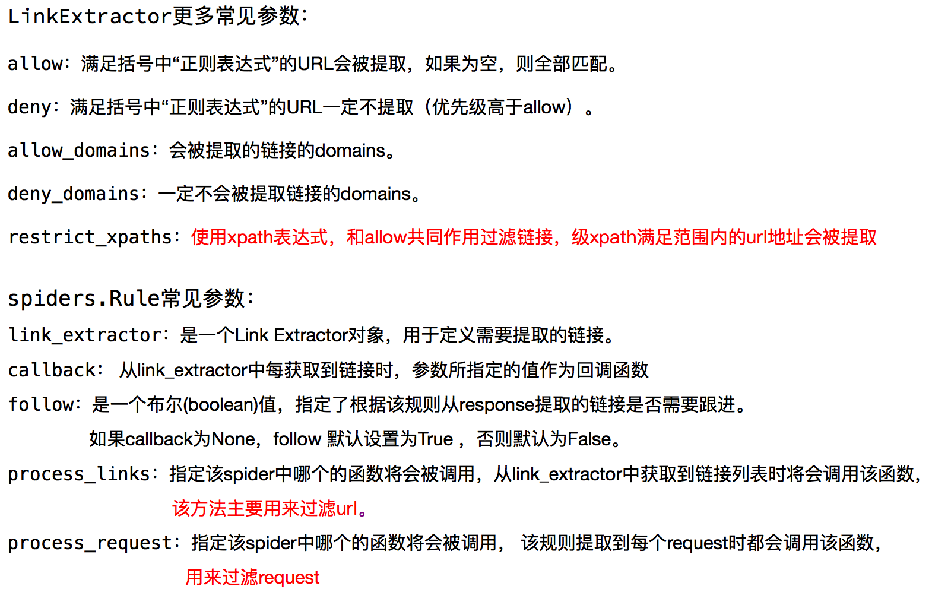
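The comments in the spider mention that incomplete URLs are completed before they are requested. The effect is the same as resolving each href against the URL of the page it was found on, which the standard library can demonstrate. This is a sketch only: the hrefs below are invented examples, and it shows URL resolution in general rather than Scrapy's internal code path.

```python
from urllib.parse import urljoin

# The list page from start_urls in the spider above.
PAGE_URL = "http://bxjg.circ.gov.cn/web/site0/tab5240/module14430/page1.htm"

# Invented hrefs of the kinds a list page might contain.
hrefs = [
    "/web/site0/tab5240/info123.htm",  # root-relative
    "page2.htm",                       # relative to the current directory
    "http://bxjg.circ.gov.cn/web/site0/tab5240/module14430/page3.htm",  # absolute
]

# Resolve every href against the page it appeared on.
absolute = [urljoin(PAGE_URL, href) for href in hrefs]

for url in absolute:
    print(url)
```

Because the allow patterns are matched against the completed URL, a pattern that starts with /web/site0/ still matches even though the full URL begins with the scheme and host.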

5. Further notes
